Prompty for VS Code!

I love developing and prototyping AI solutions. For that reason, I’m always looking for new tools that help me streamline this process. What should I say? I have found a new friend in VS Code. I recently discovered Prompty, a new extension for VS Code developed by Microsoft. But how does Prompty differ from other extensions such as Prompt flow for VS Code?

The answer is simple. Prompty standardizes how I specify, develop, run, and orchestrate my LLM prompts in a single asset. In other words, I can create a single file that contains my LLM specification and my prompt together. Moreover, this developer-friendly format lets me integrate my input data into my prompt using a Jinja-like template syntax.

Wait, that is not everything. I can also preview my requests to the LLM in VS Code in an end-user-friendly way and execute my prompt directly from the Prompty file. In addition, I can inspect the HTTP calls to my LLM. Finally, this amazing extension helps me generate code for integration into popular frameworks such as Prompt flow, LangChain, and Semantic Kernel.

Are you curious? No problem, starting with Prompty is not rocket science!

Start with Prompty in VS Code

First, I must install the extension in VS Code before I can use Prompty. I navigate to my extensions, search for Prompty, and click on Install:

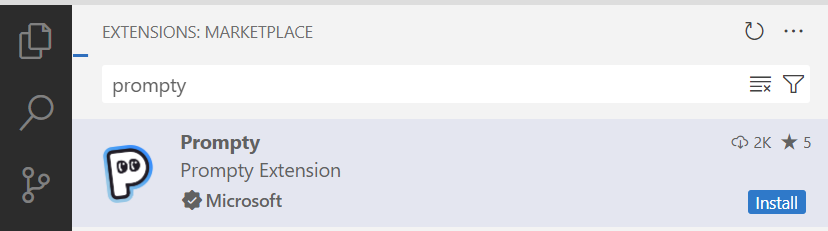

When my extension is ready, I go back to my file explorer in VS Code. Here I right-click on my src folder and navigate to New Prompty in the menu:

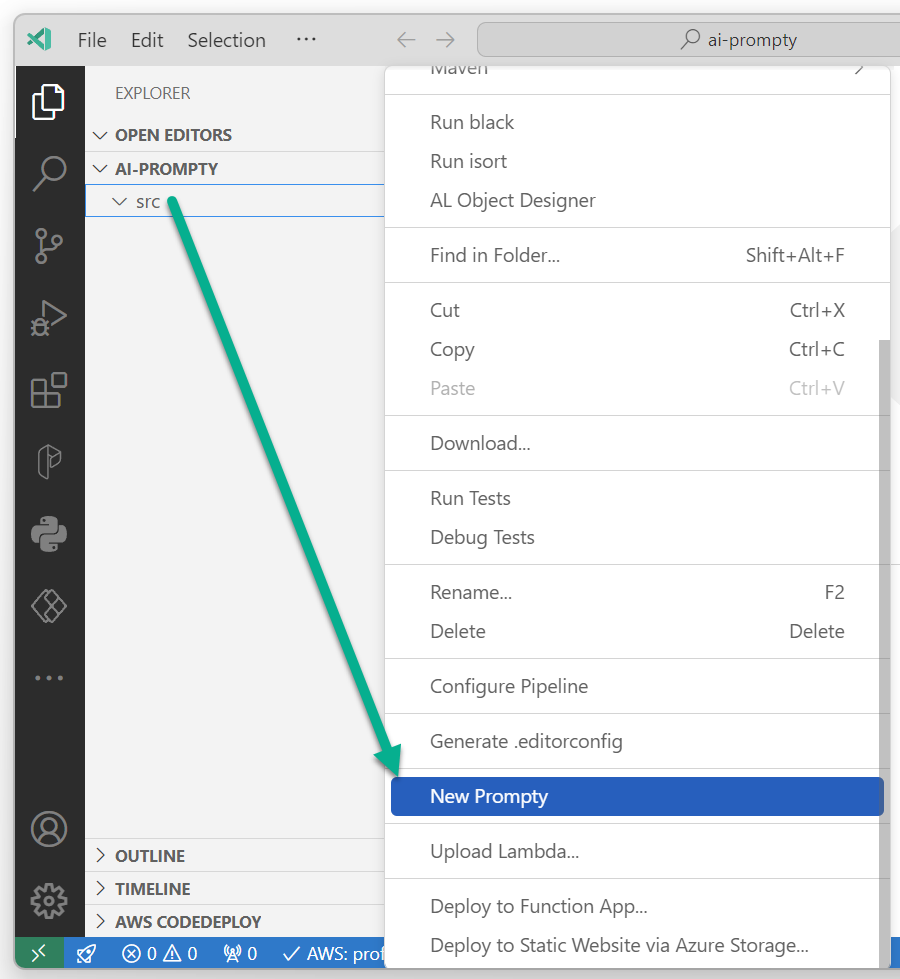

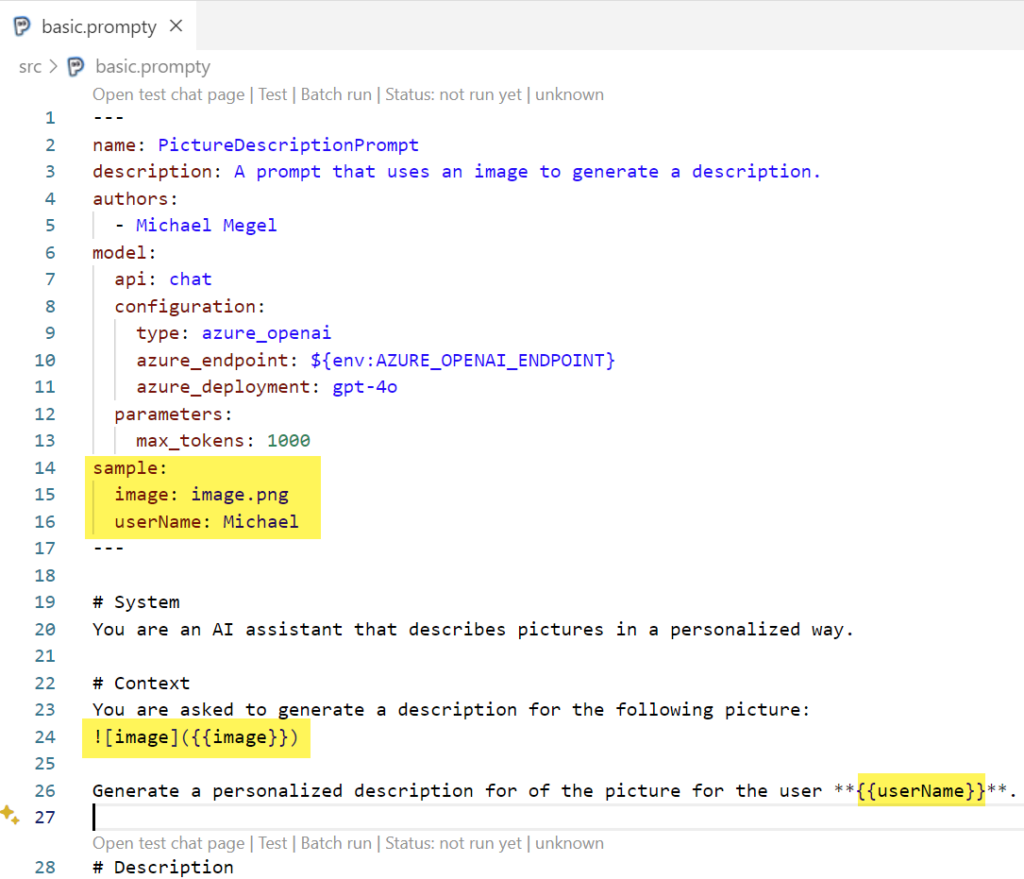

Perfect, a new file basic.prompty is generated. This Prompty file contains an example configuration and a prompt:

At the top, you see the model configuration. Here I can describe the parameters of the endpoint I use, configure the token settings, and specify input and sample parameters. Below this configuration is my prompt, which contains Jinja-like placeholders for my parameters.
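To make the two-part layout concrete, here is a minimal Python sketch that splits such a file into its configuration and its prompt. The sample text is a simplified, hypothetical stand-in for a Prompty file (I assume the configuration sits between two `---` lines), and `split_prompty` is my own helper for illustration, not part of the Prompty tooling.

```python
# Hypothetical, simplified stand-in for a .prompty file: a configuration
# header between '---' lines, followed by the prompt template.
SAMPLE_PROMPTY = """\
---
name: ExamplePrompt
model:
  api: chat
sample:
  question: What can you tell me about your tents?
---
system:
You are a helpful assistant.

user:
{{question}}
"""


def split_prompty(text: str) -> tuple[str, str]:
    """Return (configuration, prompt) from front-matter style text."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("expected the text to start with '---'")
    # The second '---' line closes the configuration header.
    for index in range(1, len(lines)):
        if lines[index].strip() == "---":
            config = "\n".join(lines[1:index])
            prompt = "\n".join(lines[index + 1:]).strip()
            return config, prompt
    raise ValueError("missing closing '---'")


config_text, prompt_text = split_prompty(SAMPLE_PROMPTY)
print("Configuration:")
print(config_text)
print("Prompt:")
print(prompt_text)
```

The point of the sketch is only the separation of concerns: everything above the second `---` describes the model and the sample data, everything below is the prompt itself.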

Okay, that’s quite simple. But I think defining my own prompt helps to discover more features of Prompty together…

Prompty in Action

Now, I want to see the real power of Prompty. I will use the GPT-4o model hosted in Azure, which handles text and images in a single model. This means I can write a prompt that passes an image to my model:

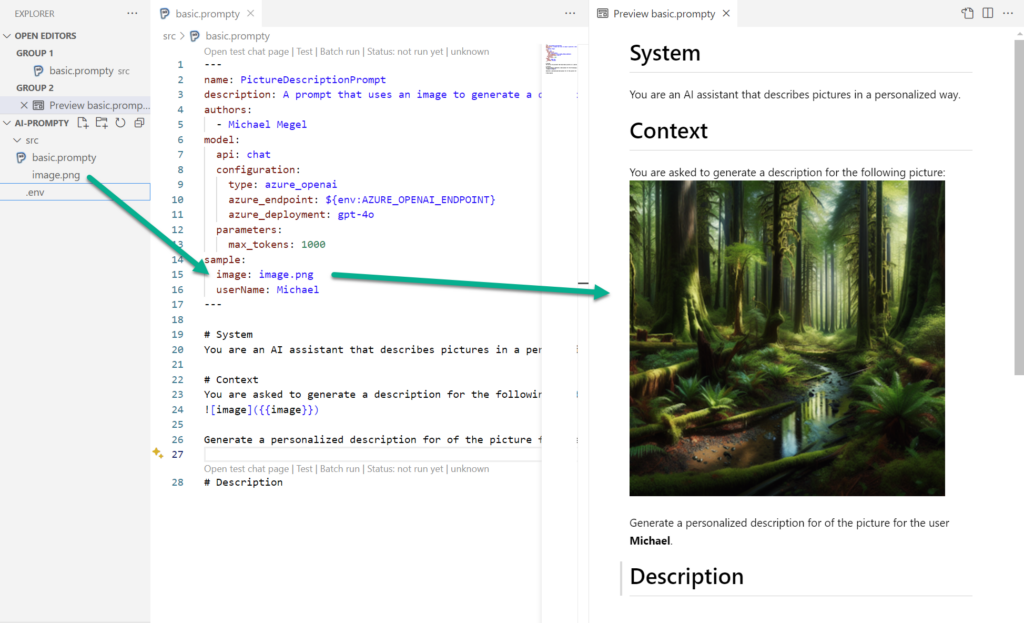

You see, I define my image directly underneath the sample section in the parameter image. Furthermore, I add userName to get a personalized answer from the LLM. Both parameters act as input variables. Again, this is straightforward. In addition, I use these variables directly in my prompt by wrapping them in double curly brackets.
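If you are wondering what happens to the curly brackets, the following Python sketch shows the basic idea of placeholder substitution. It is a simplified illustration under my own assumptions: the template text, the sample values, and the `render` helper are hypothetical, and a real Jinja-style engine supports far more than plain name replacement.

```python
import re

# Illustrative sample values, named like the two parameters described above.
sample = {"userName": "Alex", "image": "https://example.com/picture.png"}

# Hypothetical prompt template using double curly brackets.
template = "user:\nHello, my name is {{userName}}.\n![image]({{image}})"

# Matches {{name}} with optional whitespace inside the brackets.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template_text: str, values: dict[str, str]) -> str:
    """Replace each {{name}} with its value; fail loudly on unknown names."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no sample value for placeholder '{name}'")
        return values[name]

    return PLACEHOLDER.sub(substitute, template_text)


print(render(template, sample))
```

Raising an error for an unknown placeholder is a deliberate choice in this sketch: a silently empty variable in a prompt is much harder to spot than a failing render.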

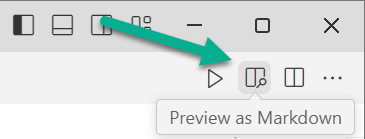

However, my current prompt in Prompty is still a mix of Markdown notation and variables. Is there a better presentation? Yes, I can switch to the preview. To do this, I toggle on Preview as Markdown in my Prompty file in VS Code:

As a result, my screen splits and I see a preview of my prompt as Markdown. You see, the image is used as a parameter in Prompty and is included directly as a picture in the Markdown preview:

That's cool, because as a developer, I see what is passed to my LLM. This is definitely end-user-friendly!

Configure Prompty Runtime

You might also have noticed the .env file in my VS Code workspace. This is one option to configure environment variables for Prompty:

    AZURE_OPENAI_ENDPOINT = https://my-endpoint.openai.azure.com/
    AZURE_OPENAI_API_KEY = ...

Another option is to set up this information in the VS Code Settings:

    "prompty.modelConfigurations": [
      {
        "name": "gpt-4o",
        "type": "azure_openai",
        "api_version": "2024-02-15-preview",
        "azure_endpoint": "https://my-endpoint.openai.azure.com/",
        "azure_deployment": "gpt-4o",
        "api_key": "..."
      }
    ]

Note: Check the API versions directly in Microsoft Docs (https://learn.microsoft.com/en-us/azure/ai-services/openai/api-version-deprecation#latest-preview-api-releases)
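Before running anything, I like to check that both values from the .env file are really there. Here is a small Python sketch for that. The `parse_env` helper is my own simplified illustration of reading `KEY = value` lines, not how Prompty loads its configuration, and the key value is a placeholder.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse KEY = value lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first '=' only, so values may contain '='.
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"')
    return values


# Placeholder content shaped like the .env file shown above.
env_text = """
# Azure OpenAI settings
AZURE_OPENAI_ENDPOINT = https://my-endpoint.openai.azure.com/
AZURE_OPENAI_API_KEY = placeholder-key
"""

settings = parse_env(env_text)
required = ("AZURE_OPENAI_ENDPOINT", "AZURE_OPENAI_API_KEY")
missing = [name for name in required if not settings.get(name)]

if missing:
    print("Missing settings:", ", ".join(missing))
else:
    print("Endpoint:", settings["AZURE_OPENAI_ENDPOINT"])
```

In a real project I would read the text from the .env file on disk instead of a string, and I would never print the API key itself.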

Execute Prompty

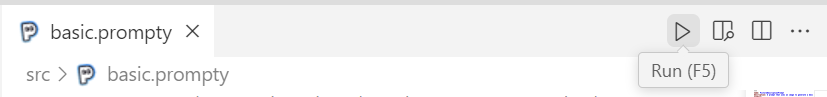

Everything is ready, so I run my Prompty file:

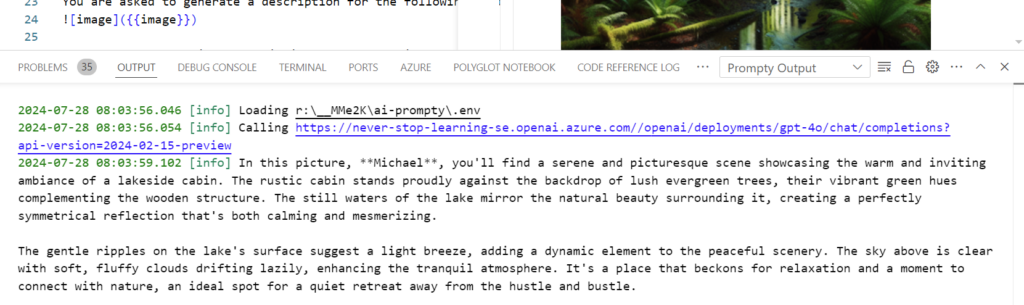

This takes a few seconds, then the result of my prompt is presented to me as Prompty Output in VS Code:

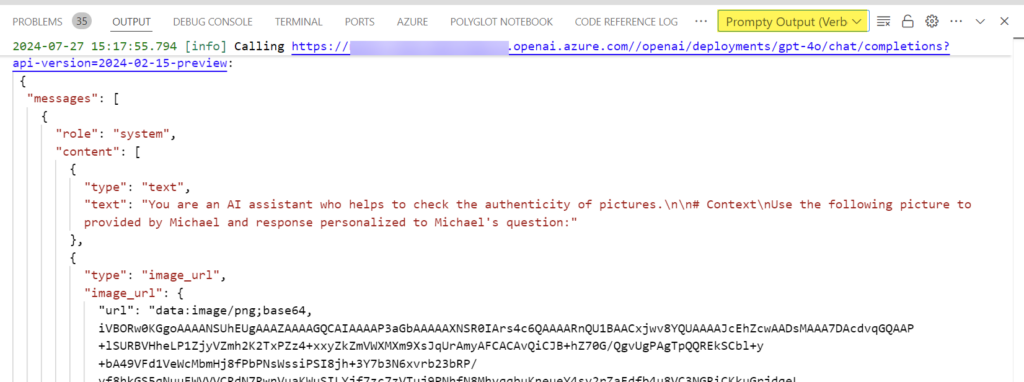

Wow, that is fantastic! But more impressive to me is the Prompty Output (Verbose) where I can see the API request and response of my execution:
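For orientation, this is roughly the shape I expect such a request to have. The Python sketch below only builds the URL, headers, and body of an Azure OpenAI chat completion call with a text part and an image part; it sends nothing. All values are placeholders, the user text and image URL are hypothetical, and the exact request that Prompty emits may differ.

```python
import json

# Placeholders; the deployment name and API version mirror the settings
# shown earlier in this article.
endpoint = "https://my-endpoint.openai.azure.com/"
deployment = "gpt-4o"
api_version = "2024-02-15-preview"

# Azure OpenAI addresses a model through its deployment name in the path.
url = (
    f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
    f"/chat/completions?api-version={api_version}"
)

# The key travels in the 'api-key' header; never log a real key.
headers = {"Content-Type": "application/json", "api-key": "<your-key>"}

# A user message that mixes a text part with an image part.
body = {
    "messages": [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "Hello, my name is Alex. What is in this picture?"},
                {"type": "image_url", "image_url": {"url": "https://example.com/picture.png"}},
            ],
        }
    ],
    "max_tokens": 500,
}

print("POST", url)
print(json.dumps(body, indent=2))
```

Comparing a hand-built request like this with the verbose output is a quick way to spot a wrong deployment name or API version.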

Integrating Prompty

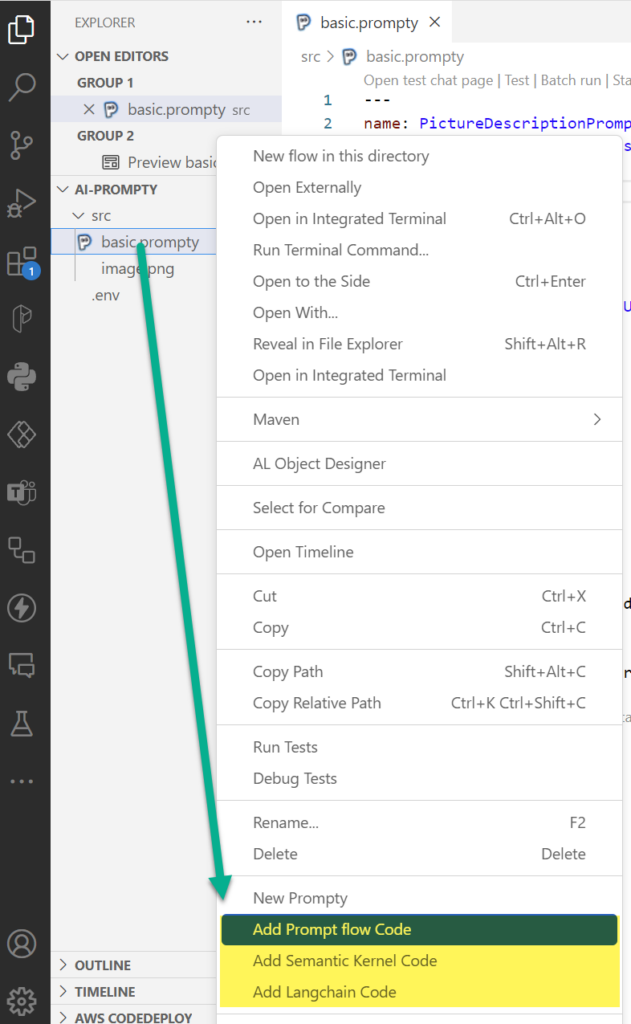

Last but not least, let me show you how easy it is to use my Prompty files from other frameworks such as Prompt flow, LangChain, and Semantic Kernel. I right-click on my Prompty file in VS Code and this menu opens:

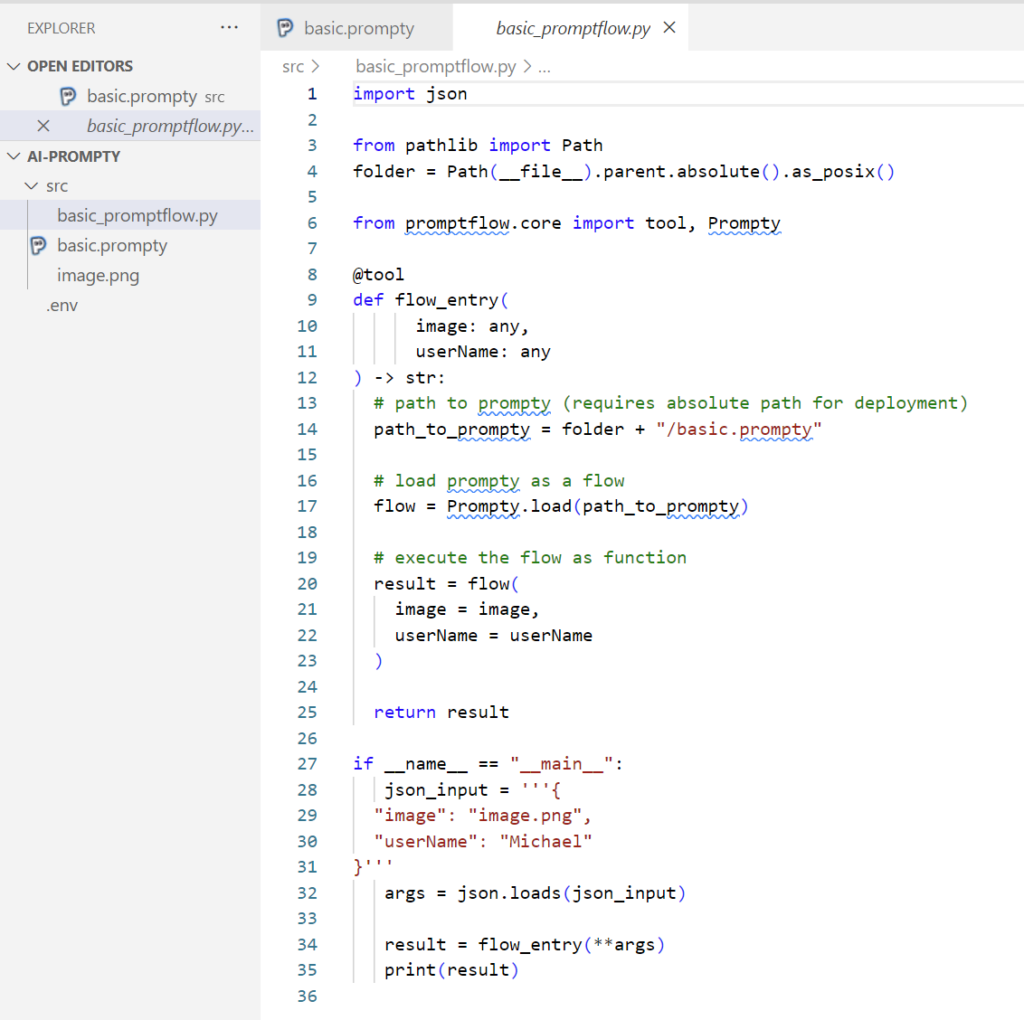

I use Prompt flow a lot in Azure AI Studio. Therefore, I click on Add Prompt flow Code, and the Prompty extension generates this code for me:

That is awesome! Prompty has generated a file that I can integrate easily into Prompt flow. The same also works with LangChain or Semantic Kernel.

Summary

I’m impressed! In my opinion, Prompty is a game changer for my AI development work. This is because Prompty simplifies how I develop AI solutions by combining configuration, example data, and prompt into a single file. In other words, it helps me as a developer to keep the focus on the AI solution.

Furthermore, I like the Markdown preview of the resolved prompt including the sample data. This helps me as an end user to see and understand what is sent to the LLM. In addition, I can execute my Prompty file directly in VS Code. Moreover, I can inspect the HTTP requests and responses in the output log. Finally, it’s easy to integrate my Prompty files with other frameworks such as Prompt flow, LangChain, and Semantic Kernel. I right-click on my Prompty file and generate wrapper code for my target framework.

Overall, Prompty lets me manage everything in one place. It’s super simple to set up and use. This makes my workflow much smoother and more efficient.

References:

- GitHub Repository: https://github.com/microsoft/prompty

- VS Code Extension: https://marketplace.visualstudio.com/items?itemName=ms-toolsai.prompty

- Prompty in Prompt flow: https://microsoft.github.io/promptflow/tutorials/prompty-quickstart.html