Power Automate Flows as API

Have you ever created an API with Power Automate Flows as endpoints? You might be wondering why you would do that. Trust me, sometimes there is a reason. Furthermore, with the growing Citizen Developer community and the number of Power Platform solutions being created, this will become increasingly important in the future.

If you joined my session “Power Automate Flows as API” at the Azure Developer Community Day 2022 at Microsoft in Munich, you already know the story. If not, no problem, because here are some details…

Decoupling Power Platform Solutions

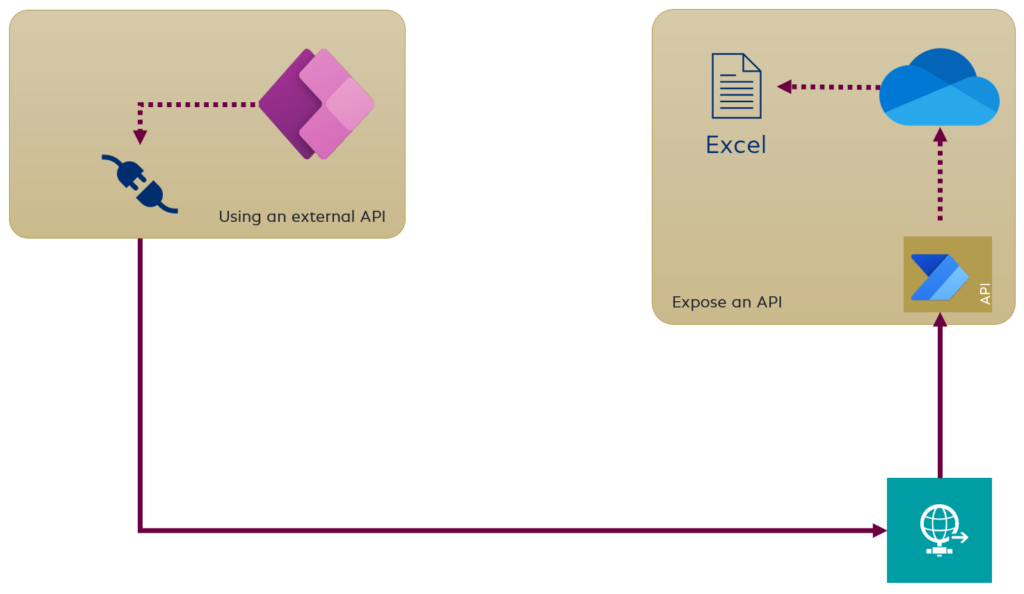

Let’s imagine I have two Power Platform solutions. My first solution (solution 1) handles all business logic. To keep it simple, this solution uses a Power Automate Flow that accesses the data in my Excel file stored in OneDrive. My second solution (solution 2) contains a Canvas App, where I want to use the data from solution 1. Both solutions are in different environments and have different Makers.

To allow communication between both solutions, I use a Power Automate Flow with a “Request Trigger” and expose an HTTP endpoint as an “API” to my second solution. Solution 2 uses this API through a custom connector. Specifically, the custom connector is configured with the HTTP endpoint from solution 1:

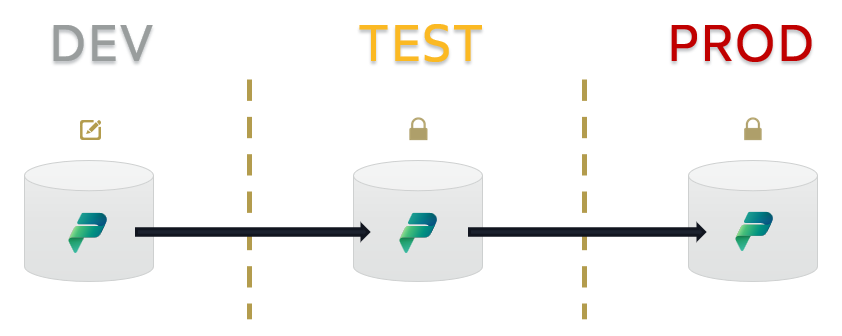

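This works well until I start to roll out my solution 1 from DEV to TEST and finally to PROD. Think about Application Lifecycle Management: the flow gets a new trigger URL in each environment, so the custom connector in solution 2 has to be reconfigured every time.

In addition, let’s imagine my solution 1 is replaced in TEST / PROD with an App Service or something else…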

The bottom line is that I need to decouple the two solutions.

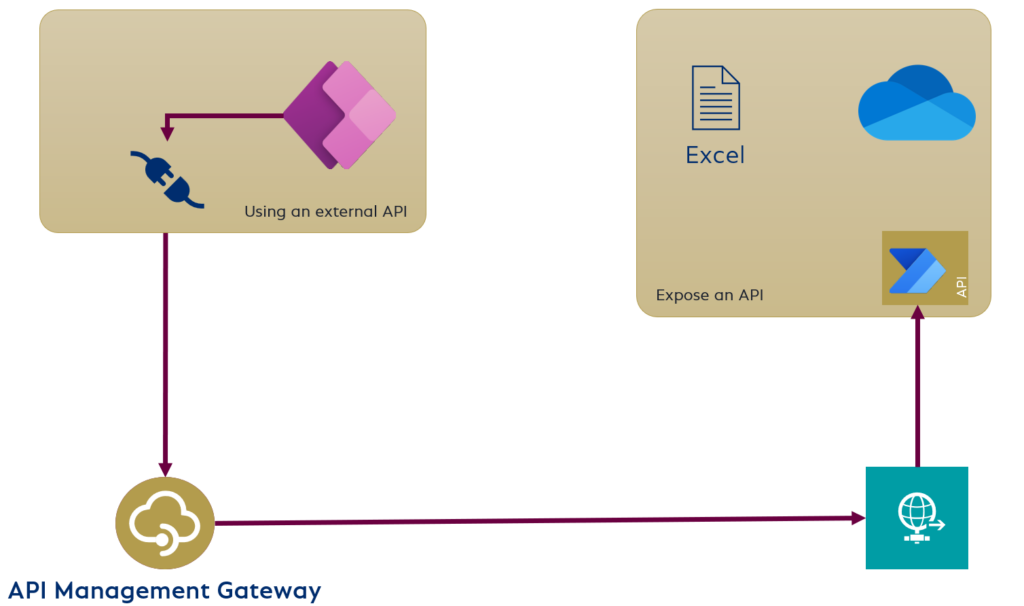

API Management Gateway

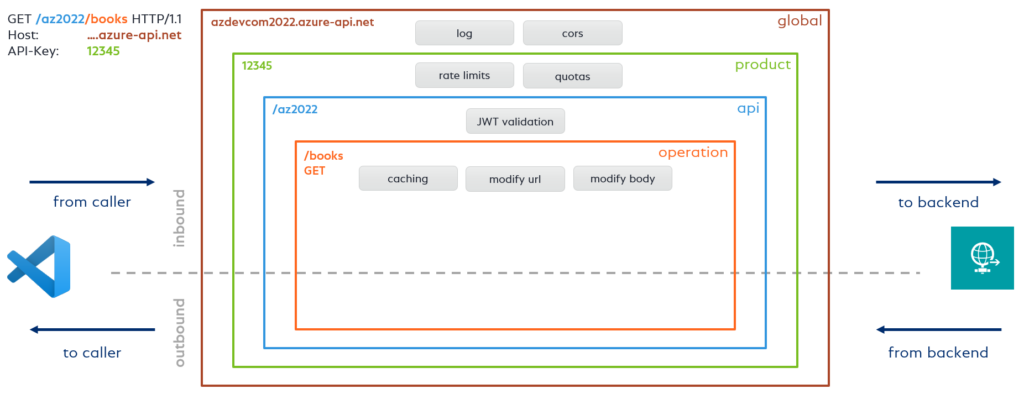

For that reason, I use a mediator between my API publisher and my API consumer. This is my API Management Gateway.

Technically, I deploy a consumption-based API Management Gateway in my Azure Portal.

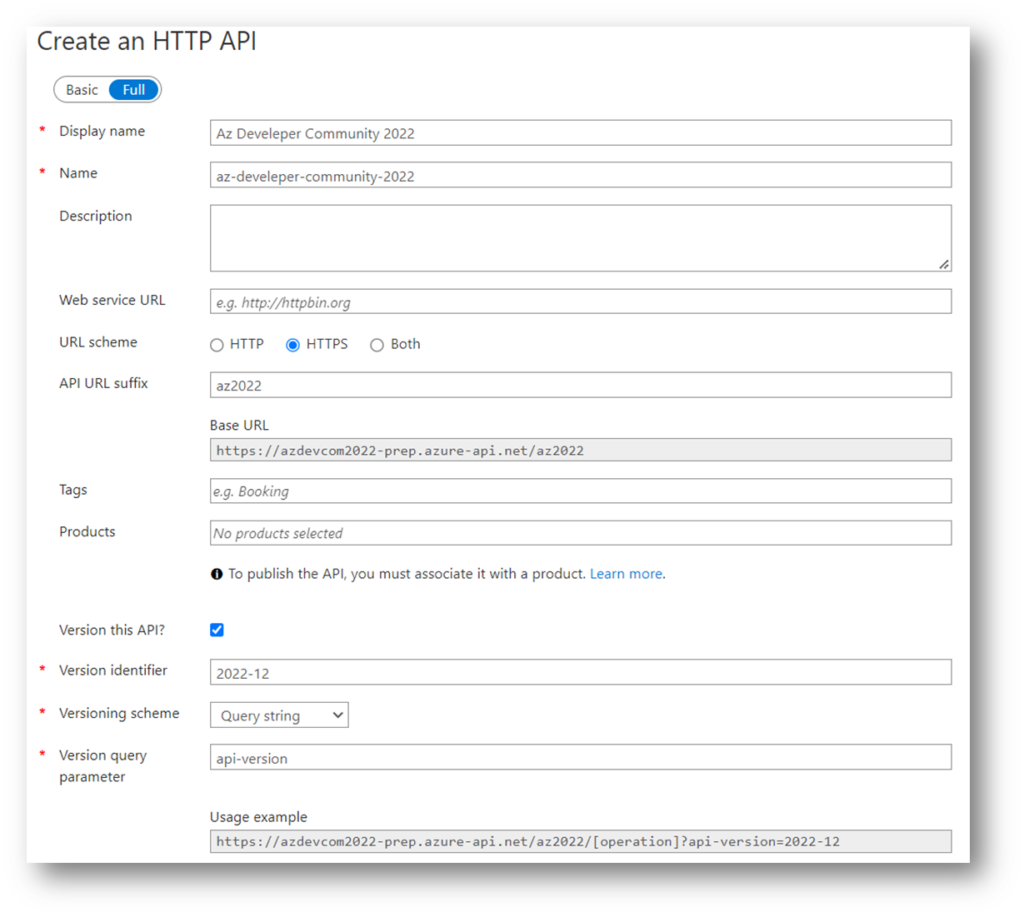

Creating an API

After provisioning, I manually define a new HTTP API. My API gets the URL suffix “az2022” and is versioned (“2012-12” as query string):

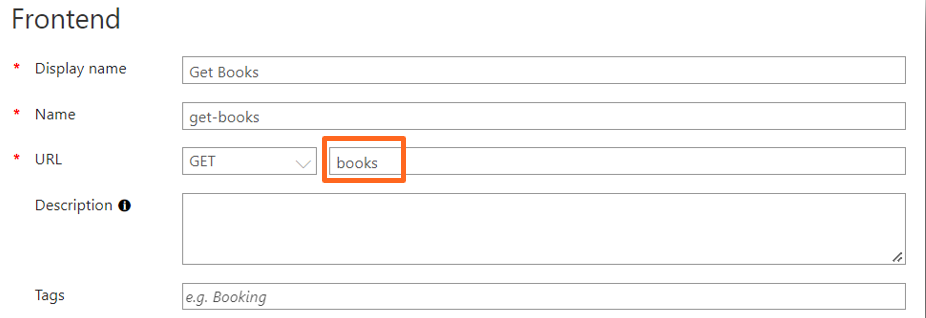

In addition, I create a new operation for my Power Automate Flow. I use “Get Books” as the name of my operation, GET as the method, and “books” as the API route to read my books.

Well done. Additional CRUD operations can be added in the same way to complete the setup.

Policies

The next part is a bit trickier, because I need to route my API call to my Power Automate Flow trigger. The good news: API Management Gateway policies can change the behavior of an API through configuration:

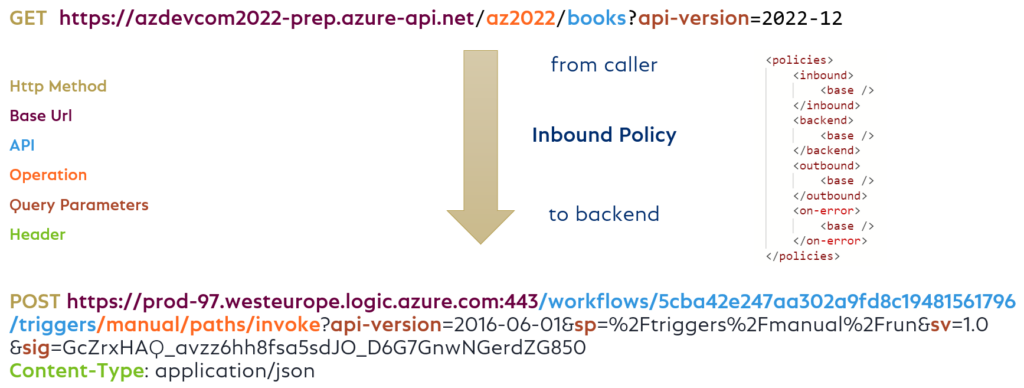

Specifically, I have to transform my incoming API call into a call to my Power Automate Flow HTTP trigger by using an inbound policy.

An inbound policy offers several ways to change the incoming HTTP request. I can modify the base URL, set the HTTP method to “POST”, rewrite the URI, add query parameters, and add “application/json” as the “Content-Type” header of my backend HTTP request.
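As a minimal sketch, these five changes could be written as an inbound policy with hard-coded values. The host, workflow ID, and signature are the truncated placeholders from my flow, and the split of the trigger URL into base URL and path is an assumption of this sketch:

```xml
<inbound>
    <base />
    <!-- Base URL: the part of the flow trigger URL before /manual/paths/invoke (assumed split) -->
    <set-backend-service base-url="https://prod-158.westeurope.logic.azure.com:443/workflows/4113...f5c0cef/triggers" />
    <!-- The API operation is GET, but the flow trigger is called with POST -->
    <set-method>POST</set-method>
    <!-- Replace the API route ("books") with the trigger path -->
    <rewrite-uri template="/manual/paths/invoke/" />
    <!-- Query parameters copied from the flow trigger URL -->
    <set-query-parameter name="api-version" exists-action="override">
        <value>2016-06-01</value>
    </set-query-parameter>
    <set-query-parameter name="sp" exists-action="override">
        <value>/triggers/manual/run</value>
    </set-query-parameter>
    <set-query-parameter name="sv" exists-action="override">
        <value>1.0</value>
    </set-query-parameter>
    <set-query-parameter name="sig" exists-action="override">
        <value>q...hLumHEylNYw</value>
    </set-query-parameter>
    <!-- Content-Type header for the backend request -->
    <set-header name="Content-Type" exists-action="override">
        <value>application/json</value>
    </set-header>
</inbound>
```

Hard-coding everything like this works for a single operation, but it has to be repeated for every further flow.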

All of this is based on the HTTP trigger URL of my Power Automate Flow. And there is much more…

Policy Fragments

Policy fragments help me to optimize my code. For example, I created this policy fragment “callFlow” in my API Management Gateway:

```xml
<fragment>
    <!-- Assign base-url based on Power Automate Flow trigger URL -->
    <set-backend-service base-url="@((string)context.Variables["baseUrl"])" />
    <!-- Assign method based on Power Automate Flow trigger -->
    <set-method>@((string)context.Variables["method"])</set-method>
    <!-- Assign path based on Power Automate Flow trigger -->
    <rewrite-uri template="/manual/paths/invoke/" />
    <!-- Assign query parameters based on Power Automate Flow trigger URL -->
    <set-query-parameter name="api-version" exists-action="override">
        <value>2016-06-01</value>
    </set-query-parameter>
    <set-query-parameter name="sp" exists-action="override">
        <value>/triggers/manual/run</value>
    </set-query-parameter>
    <set-query-parameter name="sv" exists-action="override">
        <value>1.0</value>
    </set-query-parameter>
    <set-query-parameter name="sig" exists-action="override">
        <value>@((string)context.Variables["sig"])</value>
    </set-query-parameter>
    <!-- Add Content-Type: application/json to request headers -->
    <set-header name="Content-Type" exists-action="override">
        <value>application/json</value>
    </set-header>
</fragment>
```

In addition, I use variables in my operation policy, where I specify my HTTP trigger URL, my HTTP method, and the value of the query parameter “sig”:

```xml
<policies>
    <inbound>
        <!-- My flow trigger URL details: -->
        <!-- Base URL of the trigger (everything before /manual/paths/invoke, which the fragment appends) -->
        <set-variable name="baseUrl" value="https://prod-158.westeurope.logic.azure.com:443/workflows/4113...f5c0cef/triggers" />
        <set-variable name="sig" value="q...hLumHEylNYw" />
        <set-variable name="method" value="POST" />
        <base />
        <!-- Use the Policy-Fragment -->
        <include-fragment fragment-id="callFlow" />
    </inbound>
    <backend>
        <base />
    </backend>
    <outbound>
        <base />
    </outbound>
    <on-error>
        <base />
    </on-error>
</policies>
```

In detail, these are 4 lines of code for my operation policy. As a result, the included fragment contains the reusable policy snippet and modifies my request to my HTTP trigger based on the variables.
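To illustrate the reuse, a second operation (for example a hypothetical “Create Book” operation backed by another flow) could include the same fragment and only change the variables. `FLOW-HOST`, `WORKFLOW-ID`, and the named value `create-book-sig` are placeholders invented for this sketch. Keeping the signature in a named value instead of inline is one possible option, not a requirement of the setup:

```xml
<policies>
    <inbound>
        <!-- Hypothetical second flow: replace FLOW-HOST and WORKFLOW-ID with the values from its trigger URL -->
        <set-variable name="baseUrl" value="https://FLOW-HOST:443/workflows/WORKFLOW-ID/triggers" />
        <!-- Assumed named value "create-book-sig" holding the sig query parameter of that flow -->
        <set-variable name="sig" value="{{create-book-sig}}" />
        <set-variable name="method" value="POST" />
        <base />
        <!-- Same fragment as before, no copy of the transformation logic -->
        <include-fragment fragment-id="callFlow" />
    </inbound>
    <backend>
        <base />
    </backend>
    <outbound>
        <base />
    </outbound>
    <on-error>
        <base />
    </on-error>
</policies>
```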

Summary

First of all, I will answer the question: “Does it make sense to set up an API based on Power Automate Flows?”… Well, there is no clear answer, because it depends… It’s more about: When do you use this pattern?

In other words, I think it makes sense to decouple or reuse existing Power Platform solutions. Smaller solutions are much easier to handle than large solutions. I guess we will see this pattern more often in the future.

Secondly, we must not forget that an API Management gateway also lets us exchange the underlying service when needed. This means we can easily replace a Power Automate Flow solution with alternatives (Logic App, App Service, Azure Function, …) without breaking the consumers.
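As a sketch of such a swap, the operation policy of “Get Books” could drop the flow-specific fragment and point to another backend, while the “books” route seen by consumers stays the same. The App Service URL below is hypothetical:

```xml
<policies>
    <inbound>
        <base />
        <!-- Hypothetical replacement backend; the operation path "/books" is appended to this base URL -->
        <set-backend-service base-url="https://my-books-service.azurewebsites.net/api" />
    </inbound>
    <backend>
        <base />
    </backend>
    <outbound>
        <base />
    </outbound>
    <on-error>
        <base />
    </on-error>
</policies>
```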

Last but not least, small, focused solutions are much easier to manage, especially when we talk about Application Lifecycle Management…