Transforming Data from a Blob Storage File

I recently had a Power Automate Flow challenge where I wanted to access and transform my data. Sounds like a simple task, but it took me a while to figure out the solution…

My data was generated by Azure Synapse and stored in an Azure Blob Storage. As you can see, each record is stored as JSON in a single row:

{"accountId": "4a09a875-268a-4d1f-9f3c-9965903e0132", "name": "..." }

{"accountId": "9e463db7-5f08-44d2-a641-06b6c8816d80", "name": "..." }

{"accountId": "8d1d379c-2a59-4b20-a2d3-3f4dbfe7bbb2", "name": "..." }Furthermore, all records are stored line by line in the whole file. Well, this shouldn’t be so hard to read and parse this content in an Power Automate Flow.

Azure Blob Storage

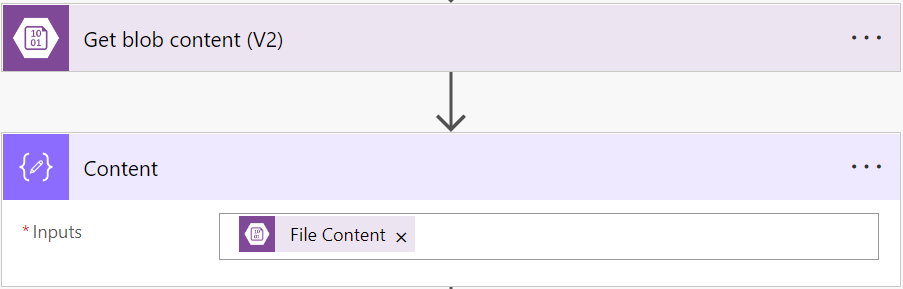

My first challenge was to read the content from my Azure Blob Storage file. For this I used a Data – Compose action and assign the content as input:

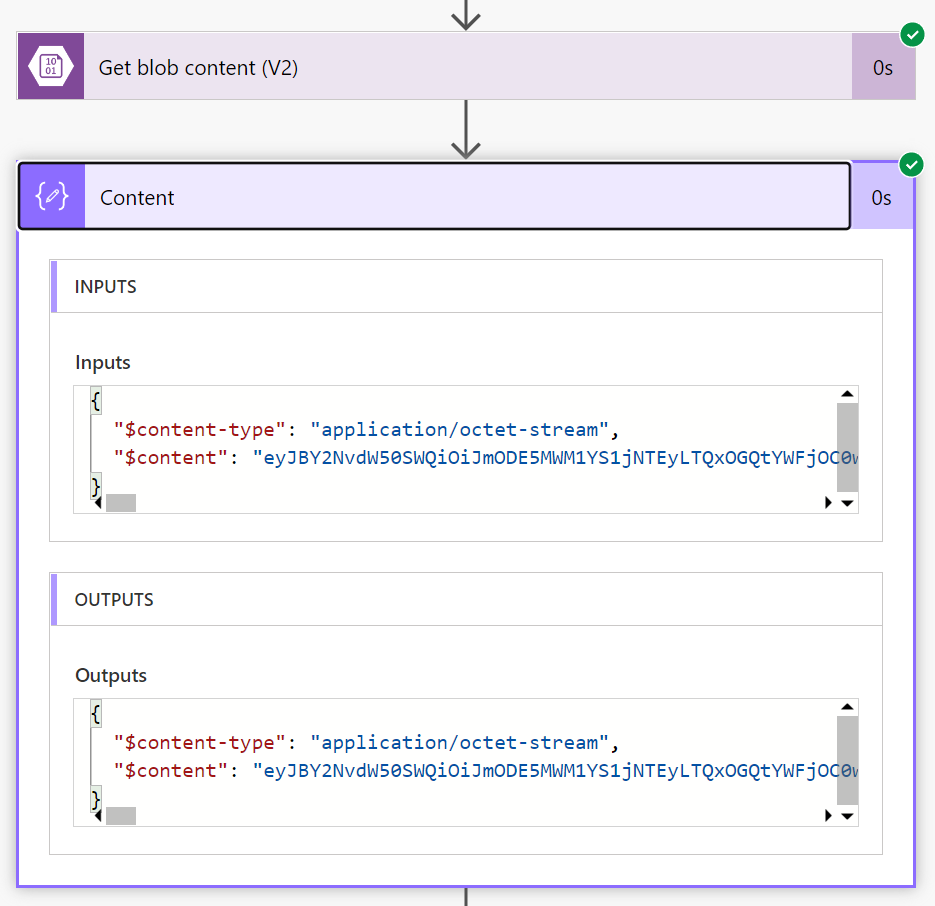

After running my Flow, I discovered I have a problem:

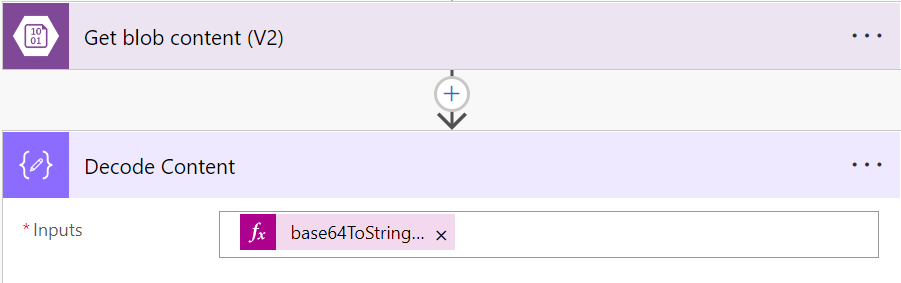

You see in this example, my data is firstly returned as Base64 encoded string. Secondly, my File Content contains this data as property “$content”. For that reason, I decoded the content:

My used expression is:

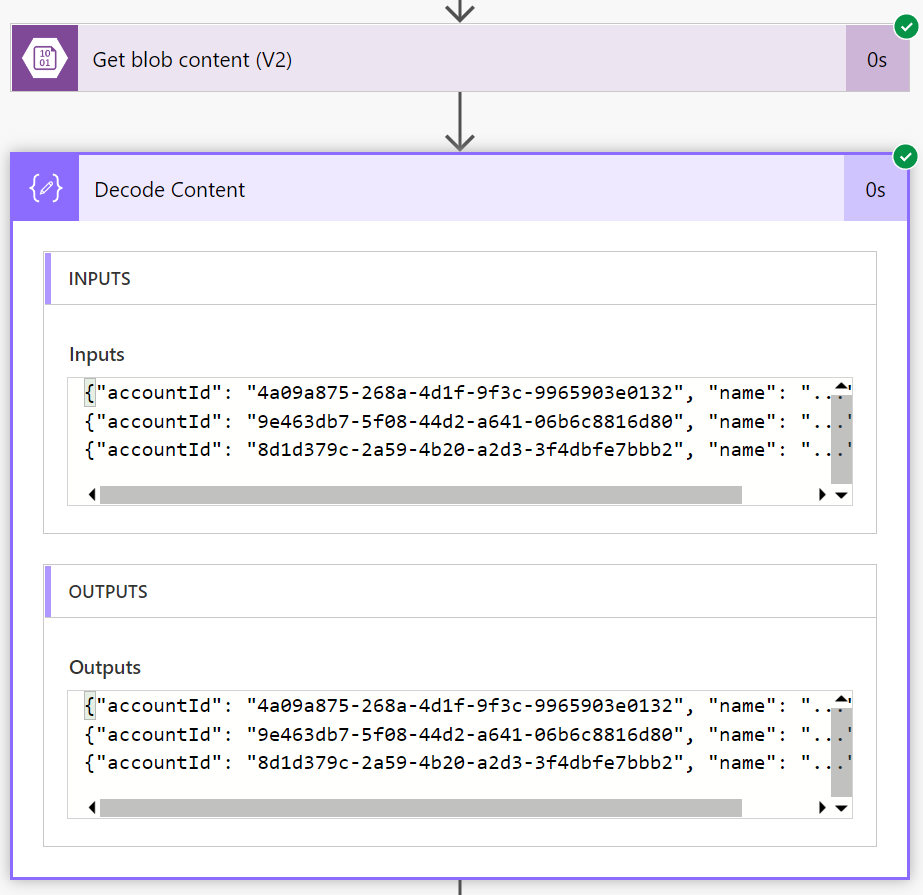

base64ToString(outputs('Get_blob_content_(V2)')?['body']?['$content']) After running my flow, you can see my result looks as expected:

Transform and Parse

Now it was time to parse my JSON content. For that I must transform my file content into a correct JSON array. But my raw data still looks like this:

{"accountId": "4a09a875-268a-4d1f-9f3c-9965903e0132", "name": "..." }\n

{"accountId": "9e463db7-5f08-44d2-a641-06b6c8816d80", "name": "..." }\n

{"accountId": "8d1d379c-2a59-4b20-a2d3-3f4dbfe7bbb2", "name": "..." }\n

\nYou see, firstly I must replace all “\n” by “,” except the last one. Secondly I have to add “[” at the beginning and “]” at the end of my string in order to get a correct JSON array like this:

[{"accountId": "4a09a875-268a-4d1f-9f3c-9965903e0132", "name": "..." }

,{"accountId": "9e463db7-5f08-44d2-a641-06b6c8816d80", "name": "..." }

,{"accountId": "8d1d379c-2a59-4b20-a2d3-3f4dbfe7bbb2", "name": "..." }

]Unfortunately, there is no RegEx action for Power Automate and working with “\n” (NewLine) is also a little bit cumbersome. However, with a little bit inspiration from my favorite user group, I came up with this solution:

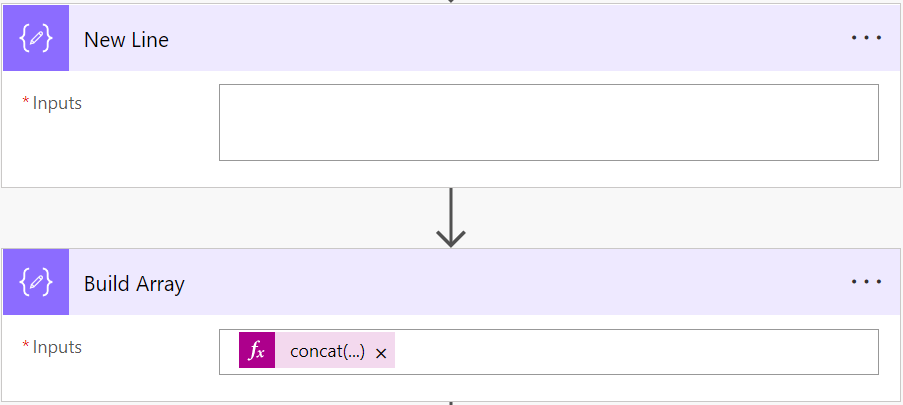

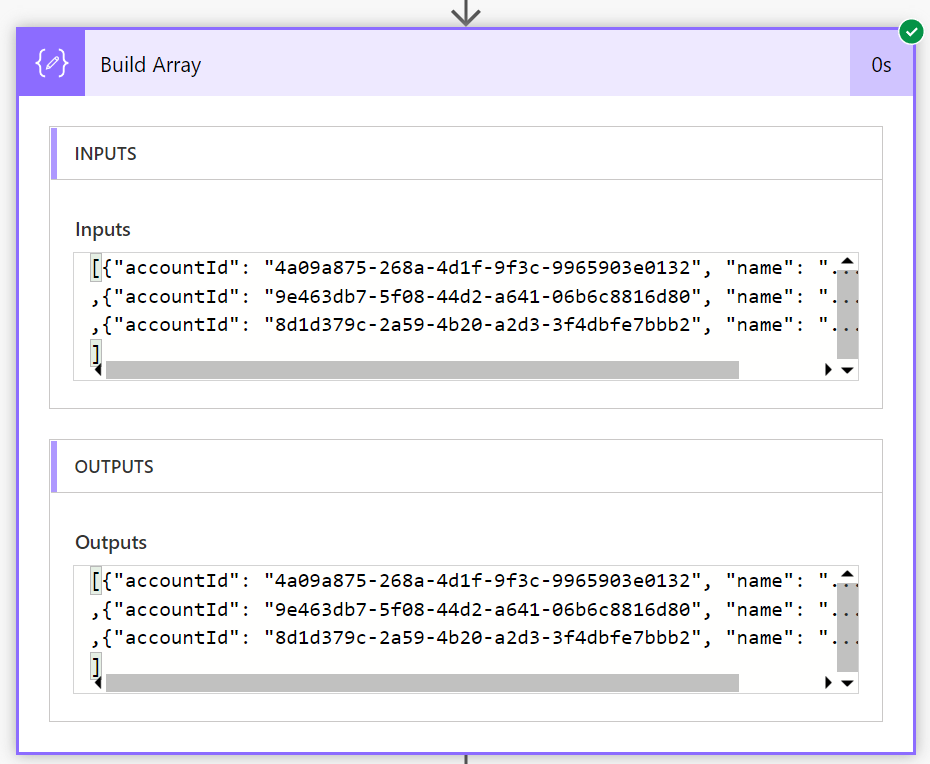

You see, I use two Data – Compose actions. First of all my “New Line” action is there to store an empty line as “\n“. Secondly I use another Data – Compose action “Build Array” to transform my content with the following expression:

concat('[', replace( take(outputs('Decode_Content'), add(length(outputs('Decode_Content')), -1)), outputs('New_Line'), ','), ']')Let me explain this a little bit. I use take(…) to get all characters except the last one, the “\n”:

take(outputs('Decode_Content'), add(length(outputs('Decode_Content')), -1))Additionally I use replace(…) to replace the “\n” by “,” and finally, I use concat(…) to surround my records with an array “[” and “]“.

The result of my Data – Compose action is now:

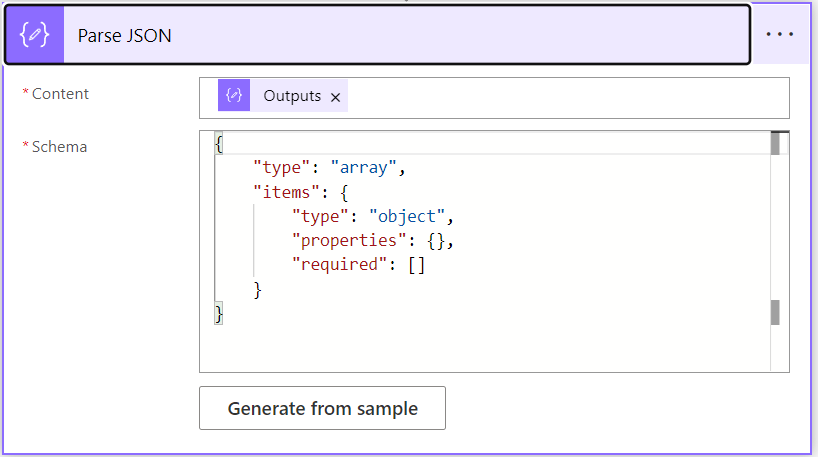

Last but not least, I use a Data – ParseJson action to parse my JSON array:

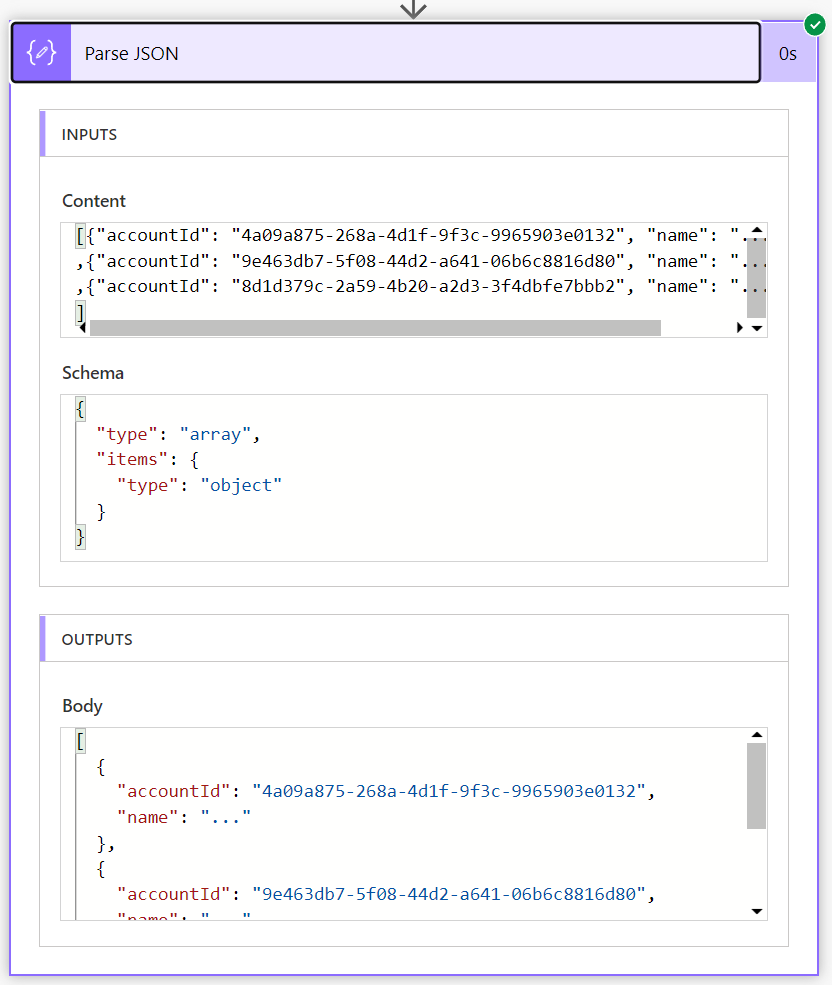

This operation works also as expected:

Summary

You have seen, I have used a lot of Data – Compose actions to store parameters and to transform my data.

First of all I discovered the secrets of my Azure Blob Storage content within a Data – Compose action. After this I stored parameters for my expression and transformed my string. Finally I used a Data – ParseJson action to convert the string into a JSON Array object.

Well, sometimes a simple solution is all what you need. But to be honest, it also takes time to find a simple solution.