Content Understanding from Power Platform

In one of my recent blog posts, I introduced you to my new friend, AI Foundry Content Understanding. There, I explained in detail how to set up Microsoft’s new AI service offering for your documents. I also showed you how I deployed my document analyzer in Azure. As a result of this exercise, I got an endpoint that I can now use in my own use cases. In other words, I can now start working with my custom AI Foundry Content Understanding analyzer from Power Platform.

Before I start with the Power Platform magic, I want to recap the important facts, starting with the API…

Understanding the Content Understanding Analyzer API

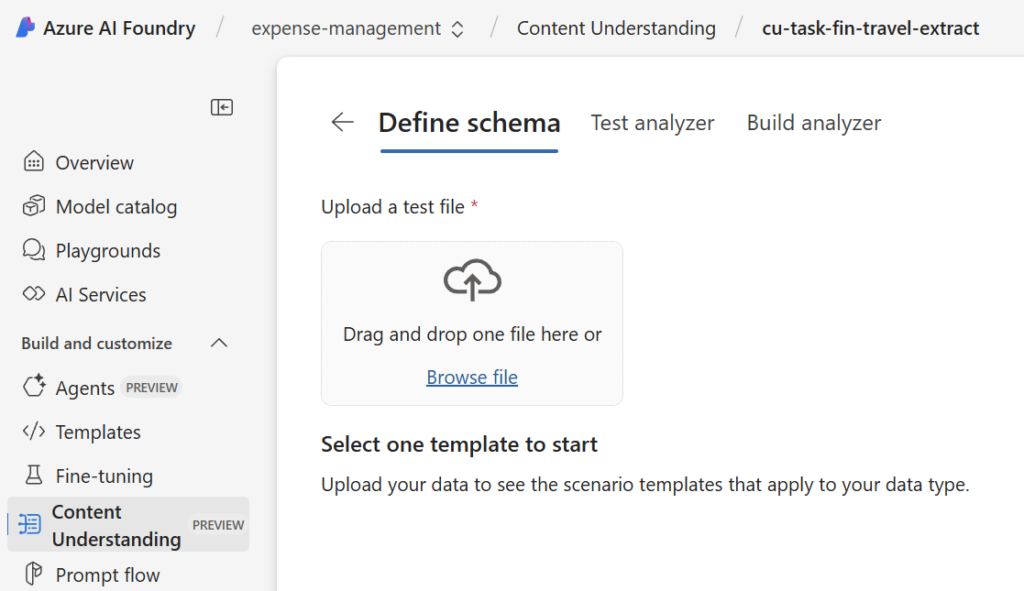

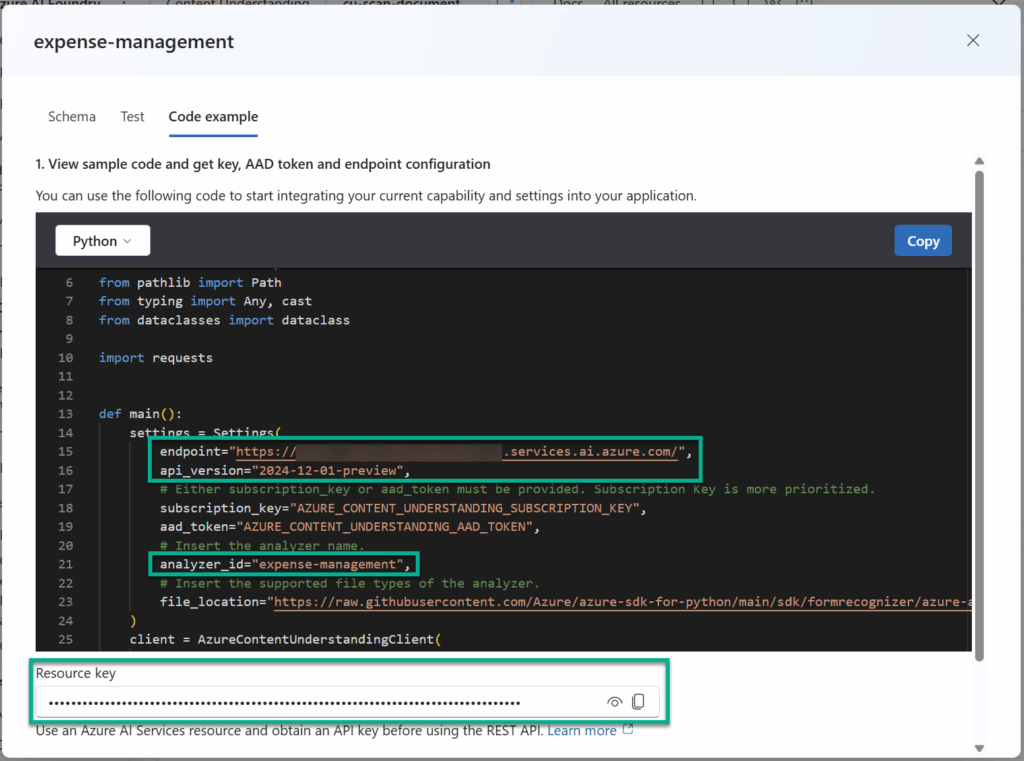

Remember, my deployed Content Understanding analyzer is hosted in Azure AI Foundry, so all the relevant information is there. I navigate to my analyzer and open the Code example:

From here, I can pick up my endpoint, analyzer_id, api_version, and resource key. Together with a file_url, this is everything I need to prepare the following API call:

```http
@endpoint = https://YOUR_AI_FOUNDRY_AI_SERVICE_INSTANCE.services.ai.azure.com
@analyzer_id = YOUR_ANALYZER_ID
@key = YOUR_ANALYZER_KEY
@file_url = YOUR_FILE_URL
@api_version = 2024-12-01-preview

### Analyze Document ###
POST {{endpoint}}/contentunderstanding/analyzers/{{analyzer_id}}:analyze?api-version={{api_version}}
Ocp-Apim-Subscription-Key: {{key}}
Content-Type: application/json

{
  "url": "{{file_url}}"
}
```

As a result, the response contains information about the started operation, including the id I need to retrieve my result:

{

"id": "704b1dc3-65b3-4c96-9358-94a59ba52a81",

"status": "Running",

"result": {

"analyzerId": "02",

"apiVersion": "2024-12-01-preview",

"createdAt": "2025-05-23T06:03:40Z",

"warnings": [],

"contents": []

}

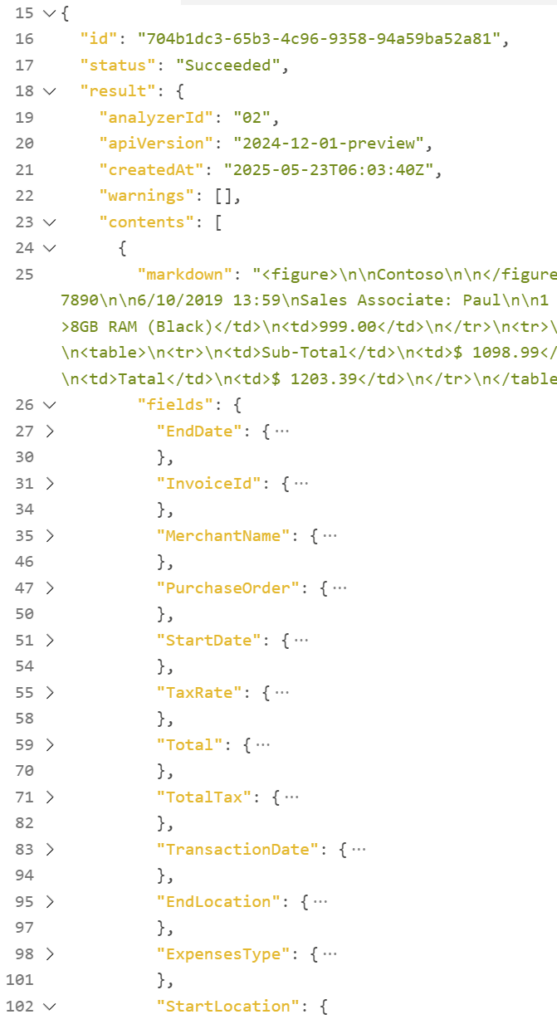

}Afterwards, I can use the returned id in my next call. Here I’m requesting my analyzer’s results:

@result_id = 704b1dc3-65b3-4c96-9358-94a59ba52a81

### Get Analyzer Result

GET {{endpoint}}/contentunderstanding/analyzers/{{analyzer_id}}/results/{{result_id}}?api-version={{api_version}}

Ocp-Apim-Subscription-Key: {{key}}When the operation is completed, I get this:
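If you prefer code over a REST client file, the same two calls can be sketched in Python with only the standard library. This is a minimal, hypothetical sketch rather than an official SDK: the helper names build_analyze_request and build_result_request are my own, and their arguments correspond to the placeholders above. The helpers only build the requests; nothing is sent until a request is passed to urllib.request.urlopen.

```python
import json
import urllib.request

API_VERSION = "2024-12-01-preview"


def build_analyze_request(endpoint: str, analyzer_id: str, key: str,
                          file_url: str,
                          api_version: str = API_VERSION) -> urllib.request.Request:
    """Build the POST ...:analyze call (hypothetical helper, not sent here)."""
    url = (f"{endpoint.rstrip('/')}/contentunderstanding/analyzers/"
           f"{analyzer_id}:analyze?api-version={api_version}")
    # The body only carries the URL the analyzer will download the file from.
    body = json.dumps({"url": file_url}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Ocp-Apim-Subscription-Key": key,   # resource key for authentication
            "Content-Type": "application/json",
        },
    )


def build_result_request(endpoint: str, analyzer_id: str, key: str,
                         result_id: str,
                         api_version: str = API_VERSION) -> urllib.request.Request:
    """Build the GET .../results/{result_id} call (hypothetical helper)."""
    url = (f"{endpoint.rstrip('/')}/contentunderstanding/analyzers/"
           f"{analyzer_id}/results/{result_id}?api-version={api_version}")
    return urllib.request.Request(
        url, method="GET", headers={"Ocp-Apim-Subscription-Key": key})
```

Sending the first request, for example with json.load(urllib.request.urlopen(req)), should return a body like the JSON above; its id then goes into build_result_request.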

This is straightforward. But what about my parameter file_url?

Get File Content

The file_url parameter must be a URL from which AI Foundry Content Understanding can download the file. In other words, my analyzer sends an HTTP GET request to this URL to download the file content.

Here is an example of what my analyzer expects when it makes this call:

```http
@file_url = https://...my-url...?...some-parameters

### Request
GET {{file_url}}

### Response
HTTP/1.1 200 OK
Content-Length: 86380
Content-Type: application/octet-stream

... the binary file content ...
```

As you can see, the request contains no header information, because my analyzer has no way of knowing which headers it should send. Furthermore, my analyzer expects the response to contain the raw file content together with its content type.

In other words, I must create an endpoint that delivers my file content. Today, I will use Power Automate for this.
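To make this contract concrete, the following sketch serves files the way the analyzer expects, using Python’s http.server: a plain GET without custom headers, answered with the raw bytes and a Content-Type. It only illustrates the contract and is not a replacement for the Power Automate flow; the in-memory FILES store, the /file path, and the id query parameter are hypothetical. Keep in mind that a localhost URL is not reachable from Azure, so a setup like this is only useful for local experiments.

```python
import http.server
import threading
import urllib.parse
import urllib.request

# Hypothetical in-memory store: record id -> (content type, raw bytes)
FILES = {"42": ("application/pdf", b"%PDF-1.7 demo")}


class FileContentHandler(http.server.BaseHTTPRequestHandler):
    def do_GET(self):
        # Read the record id from the query string, e.g. /file?id=42
        query = urllib.parse.parse_qs(urllib.parse.urlsplit(self.path).query)
        record_id = query.get("id", [None])[0]
        if record_id not in FILES:
            self.send_error(404, "Unknown record id")
            return
        content_type, data = FILES[record_id]
        # Answer with the raw bytes plus their content type, nothing else
        self.send_response(200)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):
        pass  # keep the console quiet


def start_server(port: int = 0) -> http.server.HTTPServer:
    """Start the server on a background thread (port 0 picks a free port)."""
    server = http.server.HTTPServer(("127.0.0.1", port), FileContentHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_like_analyzer(url: str) -> tuple[str, bytes]:
    """Plain GET without custom headers, as the analyzer does."""
    with urllib.request.urlopen(url) as resp:
        return resp.headers.get_content_type(), resp.read()
```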

Get File Content from Power Automate

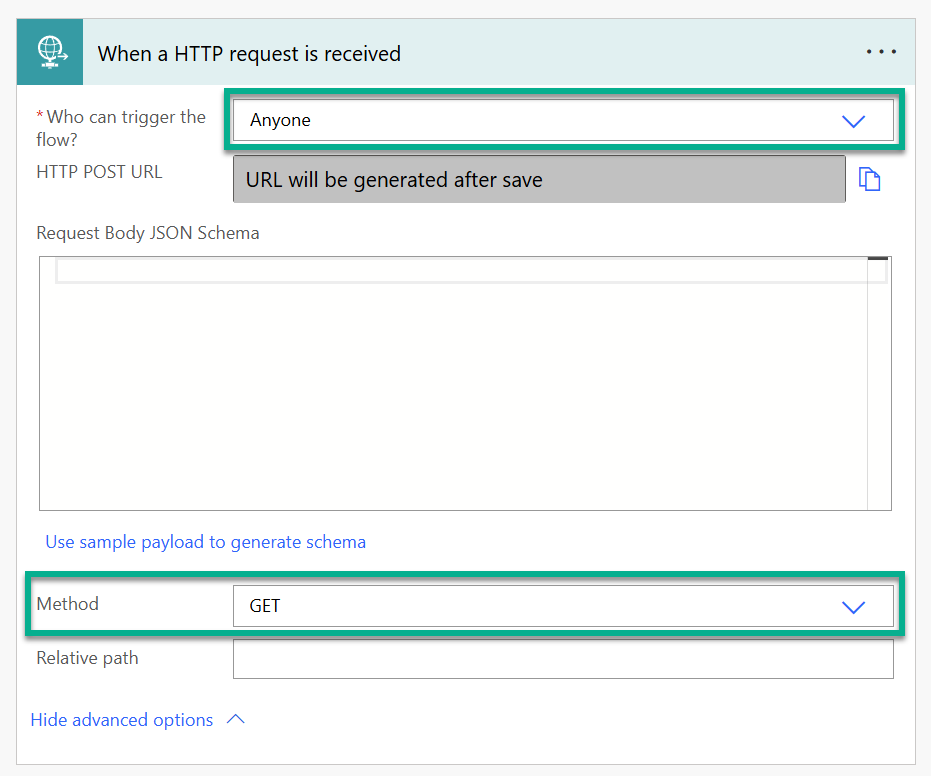

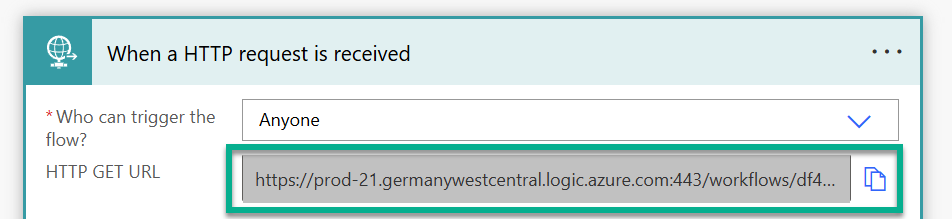

In Power Automate, I first set up a new flow. I use When a HTTP Request is received as the trigger for my flow. Moreover, I set the parameter Who can trigger the flow to Anyone and select GET as the Method:

Allowing anyone to trigger my flow is important, because my analyzer needs a pre-signed public URL that it can call without any authentication headers.

Note: In enterprise scenarios, there are better ways to secure this.

After saving my flow, I can copy my pre-signed URL here:

As you can see, my URL already contains query parameters:

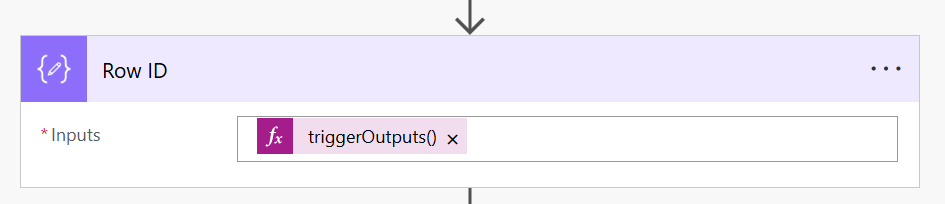

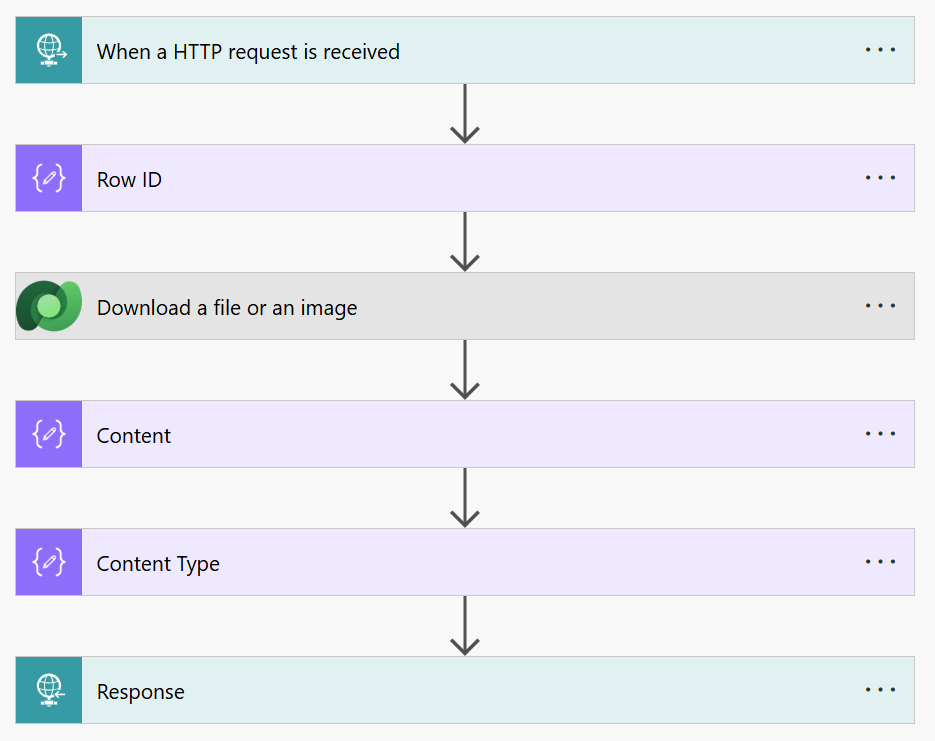

https://prod-21.germanywestcentral.logic.azure.com:443/workflows/d14999a75924347c4b739e4cc5b4334e4/triggers/manual/paths/invoke?api-version=2016-06-01&sp=%2Ftriggers%2Fmanual%2Frun&sv=1.0&sig=pZyk7...JHM

Here, I can extend the URL with my own parameter id, which carries the record id of my Dataverse table Content Understanding Documents. To read it, I add a Compose action that extracts the record id from my flow’s triggerOutputs:

My used expression is to extract the record id (id) from query parameters:

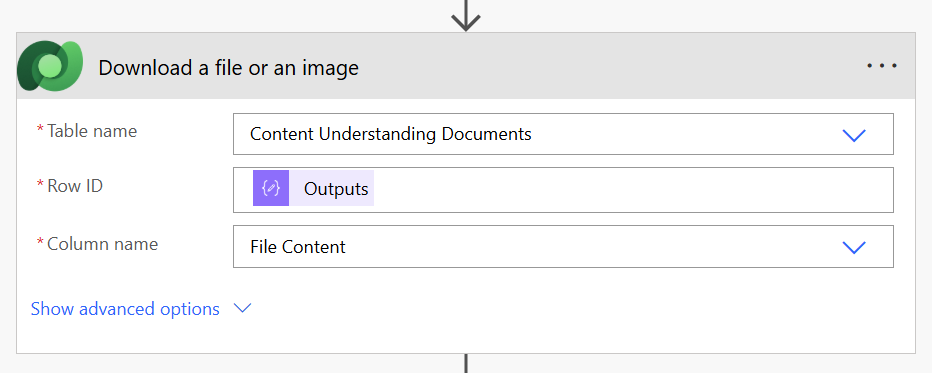

triggerOutputs()?['queries']?['id']Within this I can now access my table Content Understanding Documents. Now I’m using the action Download a file or an image (see more here). In addition, I’m configuring File Content as Column name:

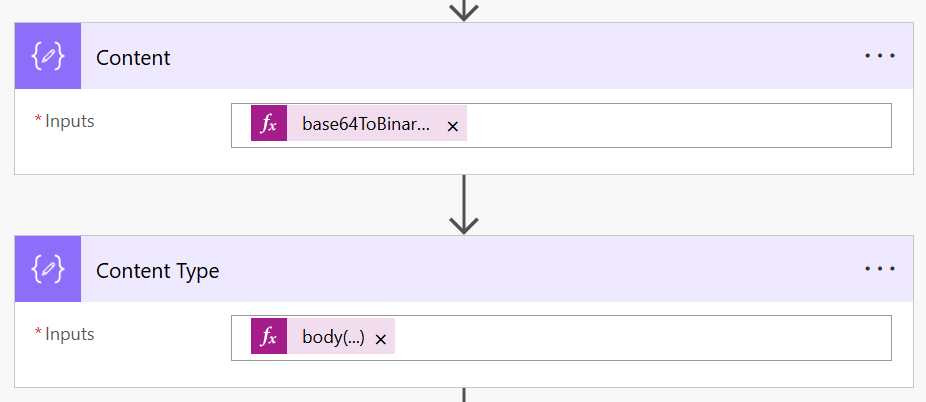

Afterwards, I must extract the content and the content type from the output of this action. I’m using again with two Compose actions:

The code is of my two Compose actions is:

# Content:

base64ToBinary(body('Download_a_file_or_an_image')?['$content'])

# Content-Type:

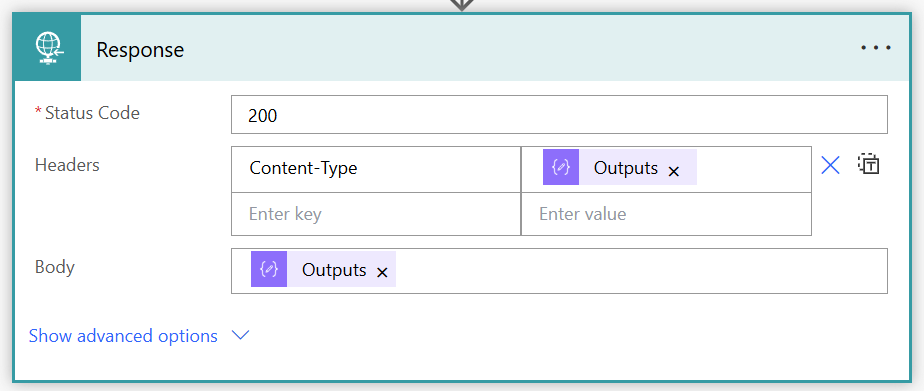

body('Download_a_file_or_an_image')?['$content-type']Finally, I’m sending back the content type as Header information and the document content as Body of a Response action:

That’s all. With these steps, I have created a flow that is available as an API endpoint. The flow takes the record id of my table Content Understanding Documents as the query parameter id, downloads the stored file, and returns its content to the caller.
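Outside Power Automate, the two Compose expressions and the Response action boil down to reading a JSON object with $content-type and base64-encoded $content, decoding the latter, and returning it with the matching header. A minimal Python sketch, assuming the action output has exactly this shape; the function name to_http_response and the octet-stream fallback are my own choices:

```python
import base64


def to_http_response(download_output: dict) -> tuple[dict, bytes]:
    """Mirror the two Compose actions and the Response action (sketch)."""
    # Compose "Content": base64ToBinary(body(...)?['$content'])
    content = base64.b64decode(download_output["$content"], validate=True)
    # Compose "Content-Type": body(...)?['$content-type']
    # The application/octet-stream fallback is an assumption, not part of the flow.
    content_type = download_output.get("$content-type", "application/octet-stream")
    headers = {"Content-Type": content_type, "Content-Length": str(len(content))}
    return headers, content
```

The important point is the decoding step: returning the base64 string as-is would hand the analyzer text instead of the original file bytes.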

Document Processing Workflow

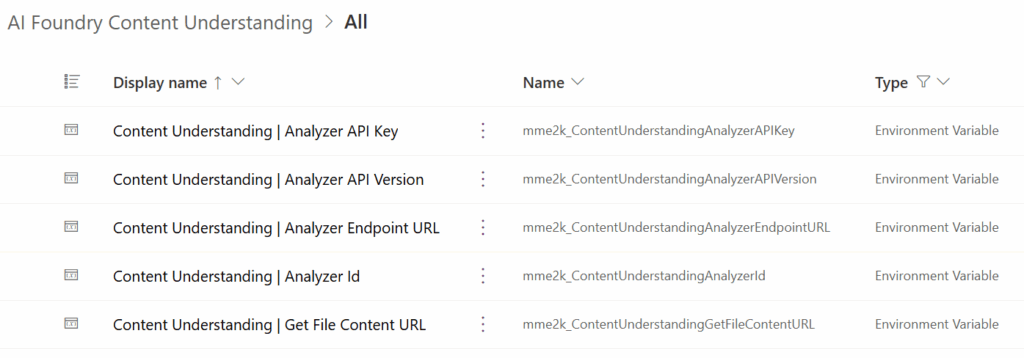

Now it is time to set up my document processing workflow in Dataverse. As a prerequisite, I create and configure the following environment variables in my solution:

These environment variables contain, first, the details of my deployed Azure AI Content Understanding analyzer and, second, the trigger URL of my Power Automate flow Get File Content.

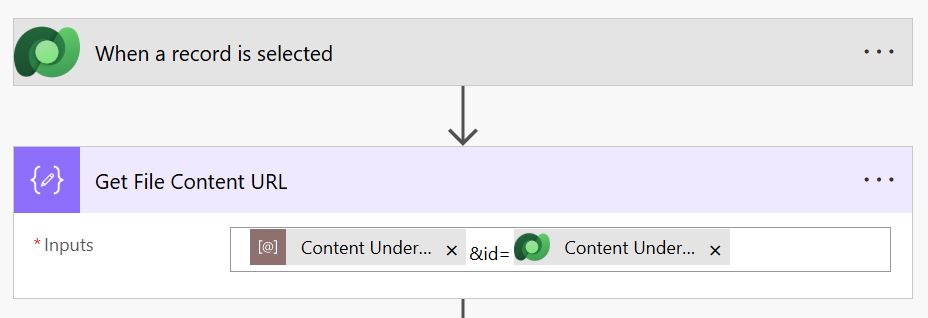

Once my environment variables are ready, I create a new Power Automate flow named Process Document. To keep my demo simple, I use When a record is selected as the trigger, because this trigger provides the current record id as a parameter.

Next, I add a Compose action that builds the file URL for my AI Foundry Content Understanding analyzer:

Here, I start with the trigger URL of my Power Automate flow Get File Content, stored as an environment variable, and append the parameter id with the current record id from my trigger.
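The URL composition can be illustrated outside Power Automate as well. In this Python sketch, add_record_id appends id=<record id> to a trigger URL that already carries query parameters, and read_record_id does roughly what triggerOutputs()?['queries']?['id'] does on the receiving side. Both helper names and the sample URLs are hypothetical. Note that the existing query string is appended to rather than re-encoded, so the signature in sig stays exactly as issued.

```python
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit, urlunsplit


def add_record_id(trigger_url: str, record_id: str) -> str:
    """Append id=<record_id> without touching the existing (signed) parameters."""
    parts = urlsplit(trigger_url)
    extra = urlencode({"id": record_id})
    query = f"{parts.query}&{extra}" if parts.query else extra
    return urlunsplit(parts._replace(query=query))


def read_record_id(url: str) -> Optional[str]:
    """Rough equivalent of triggerOutputs()?['queries']?['id']."""
    values = parse_qs(urlsplit(url).query).get("id")
    return values[0] if values else None
```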

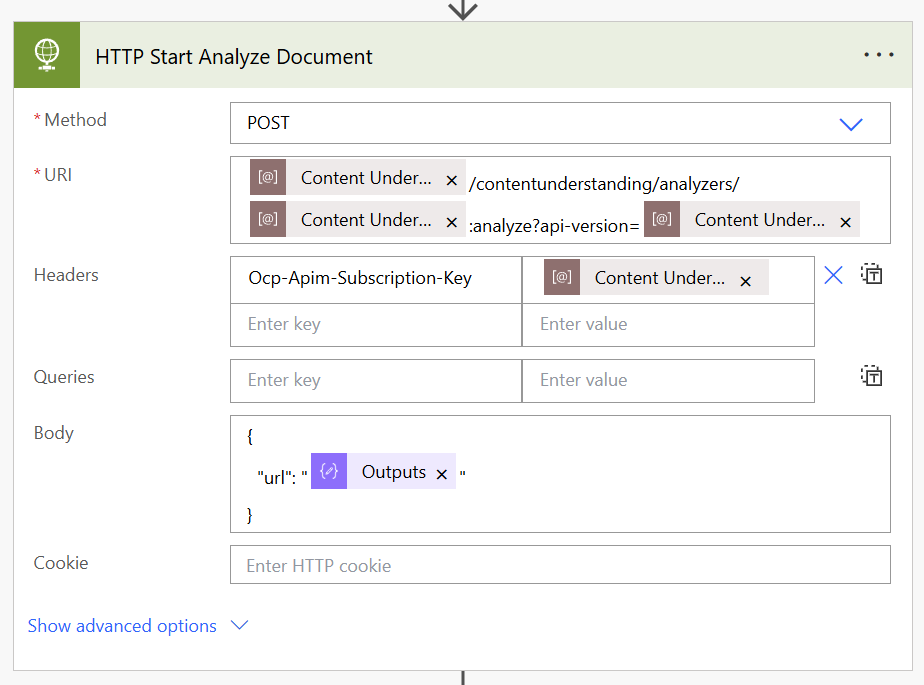

Next, I add an HTTP Request action, name it HTTP Start Analyze Document, and configure it with all the required parameters. I use POST as the Method and build the URI from my environment variables:

You can also see that I added Ocp-Apim-Subscription-Key as a header of this request, because my analyzer requires authentication. In the Body, I use the output of my Compose action Get File Content URL as the url.

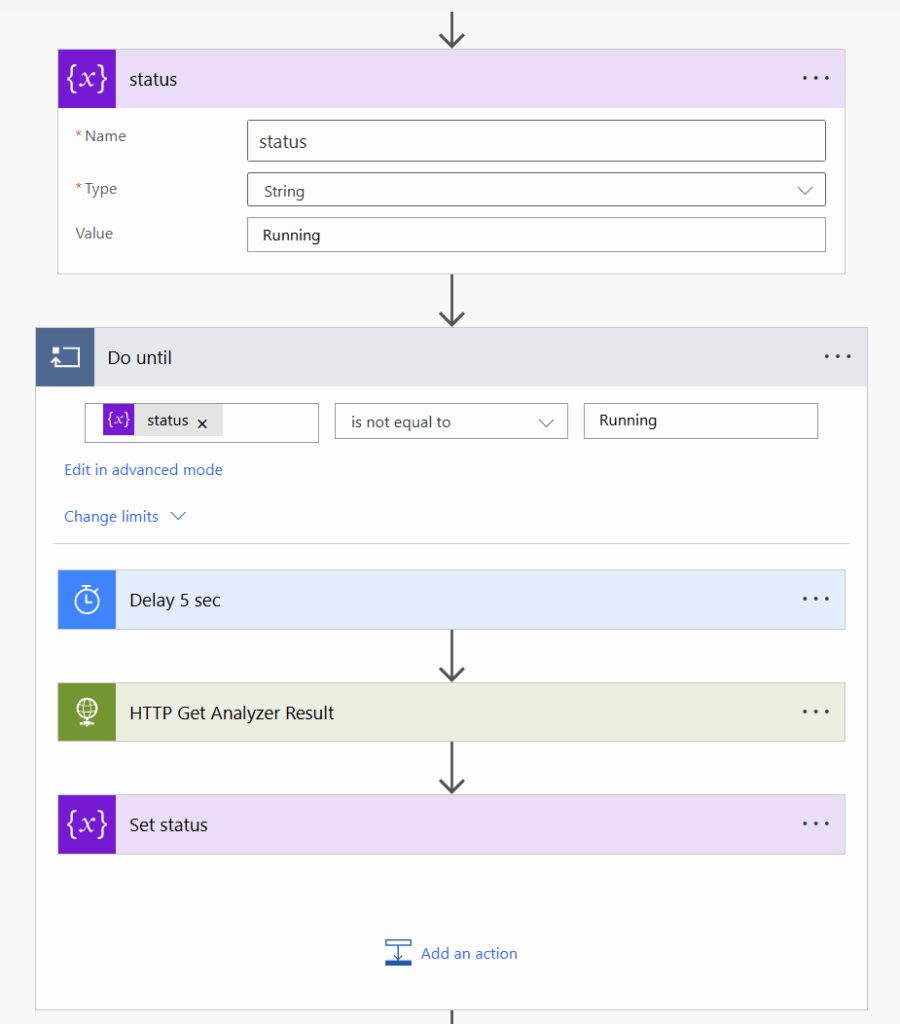

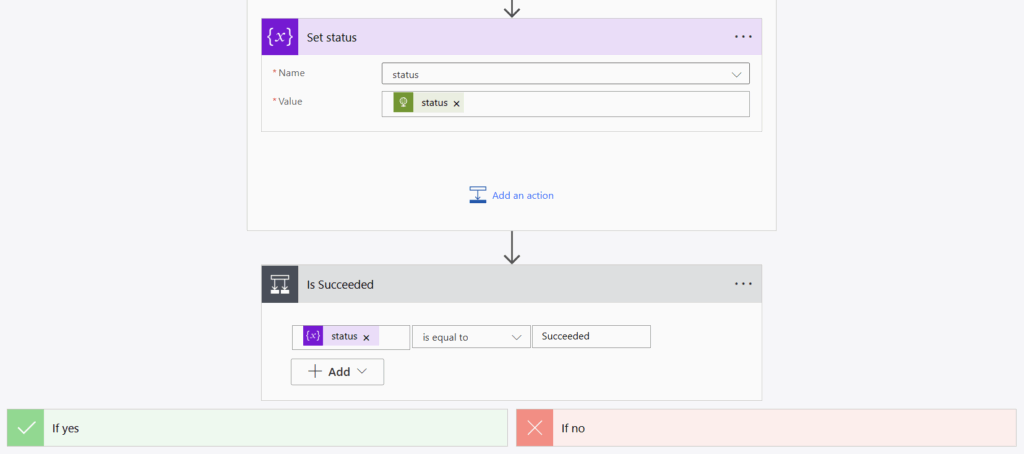

This API call only starts the operation, so I must wait until the operation is no longer Running. I do this with a variable status and a Do until loop. Inside the loop, a 5-second delay waits before each attempt to retrieve the result:

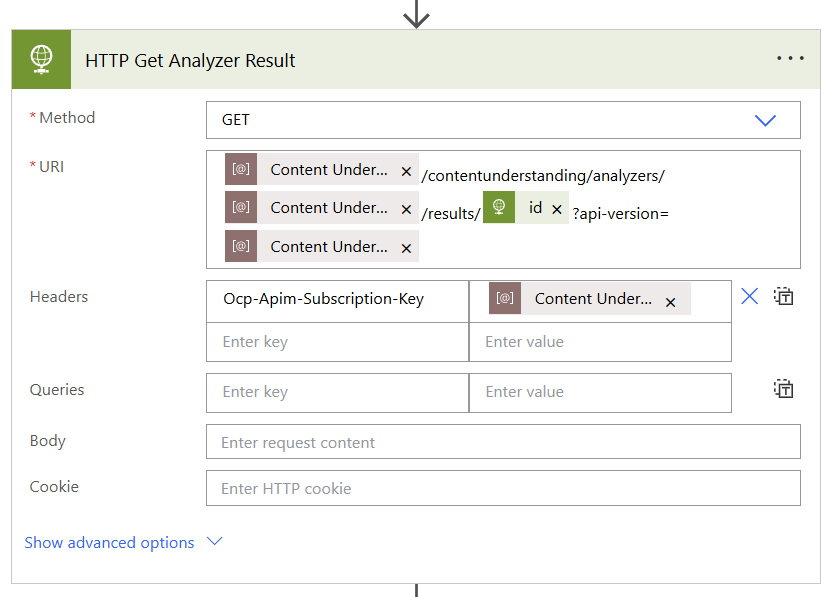

My action HTTP Get Analyzer Result is also an HTTP Request action. It uses the result id returned by the Start Analyze Document call as a parameter in the URI:

The response of this call contains the new operation status. Once the status is no longer Running, my Do until loop stops. Afterwards, I check whether the final status is Succeeded before I use my results:
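The Do until logic translates into a small polling loop. This Python sketch assumes a get_result callable that performs the HTTP Get Analyzer Result call and returns the parsed JSON; both that callable and the name poll_until_done are hypothetical. Like my flow, it waits 5 seconds before each attempt and stops once the status is no longer Running. The max_attempts default of 60 mirrors the default iteration limit of a Do until loop in Power Automate.

```python
import time
from typing import Callable, Iterable


def poll_until_done(get_result: Callable[[], dict],
                    delay_seconds: float = 5.0,
                    max_attempts: int = 60,
                    pending: Iterable[str] = ("Running",),
                    sleep: Callable[[float], None] = time.sleep) -> dict:
    """Poll like the Do until loop: wait, fetch, stop when no longer pending."""
    # Only "Running" is treated as pending, as in my flow; add other
    # in-progress states here if your service reports them (assumption).
    pending_states = set(pending)
    for _ in range(max_attempts):
        sleep(delay_seconds)        # the 5-second Delay action
        response = get_result()     # the HTTP Get Analyzer Result action
        if response.get("status") not in pending_states:
            return response         # loop ends; caller checks for Succeeded
    raise TimeoutError(f"Operation still pending after {max_attempts} attempts")
```

The sleep parameter is injectable so that the loop can be tested without actually waiting. After the loop, the caller still checks for Succeeded, just like the condition in my flow.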

Perfect, my second flow is ready!

Testing my Solution

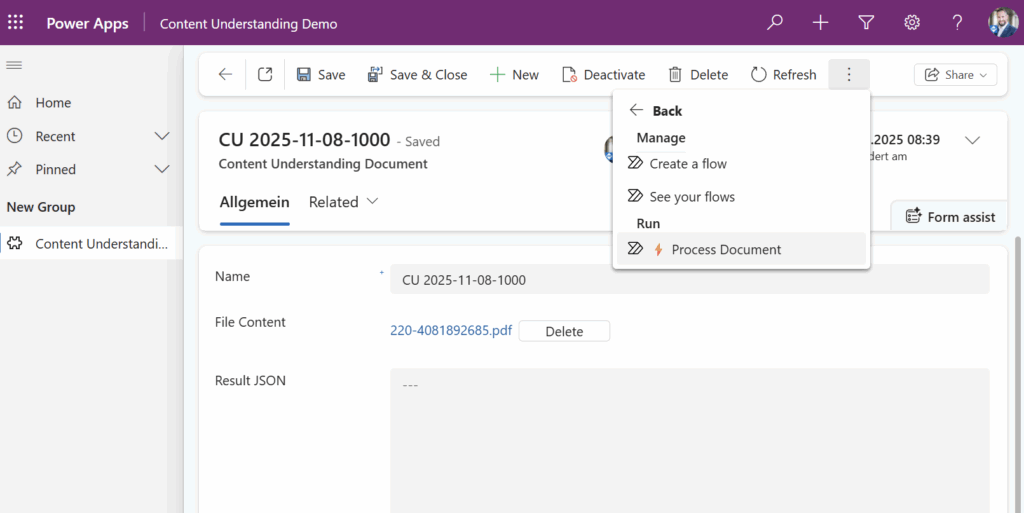

For testing, I created a small Model-driven App in my Dataverse solution. From its user interface, I can trigger my Power Automate flow Process Document directly:

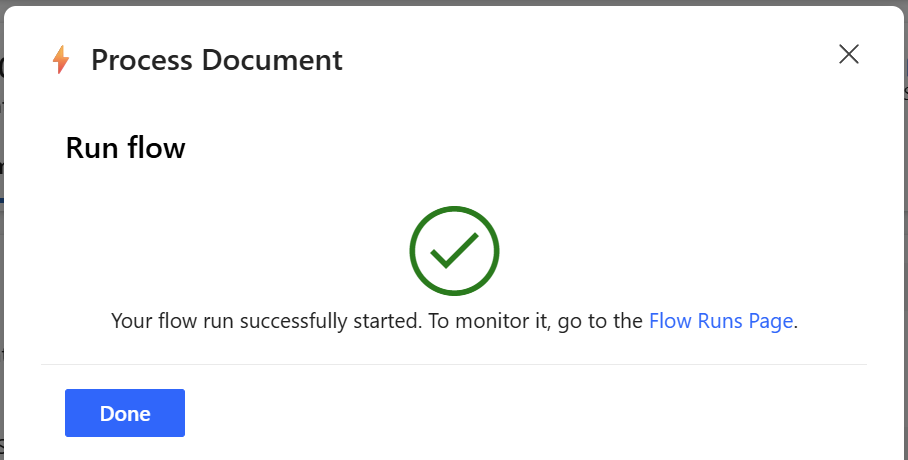

Now my flow is running:

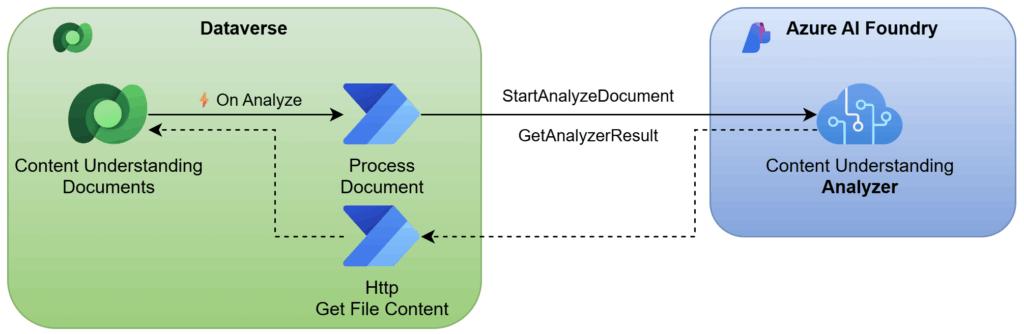

Behind the scenes, my Power Automate flow Process Document now calls my Azure AI Content Understanding analyzer. In the StartAnalyzeDocument call, it passes the trigger URL of the flow Get File Content together with the record id as an additional parameter:

My AI Foundry analyzer uses this URL to download the file content stored in the Dataverse table Content Understanding Documents. Content Understanding then analyzes my document and extracts the information I defined.

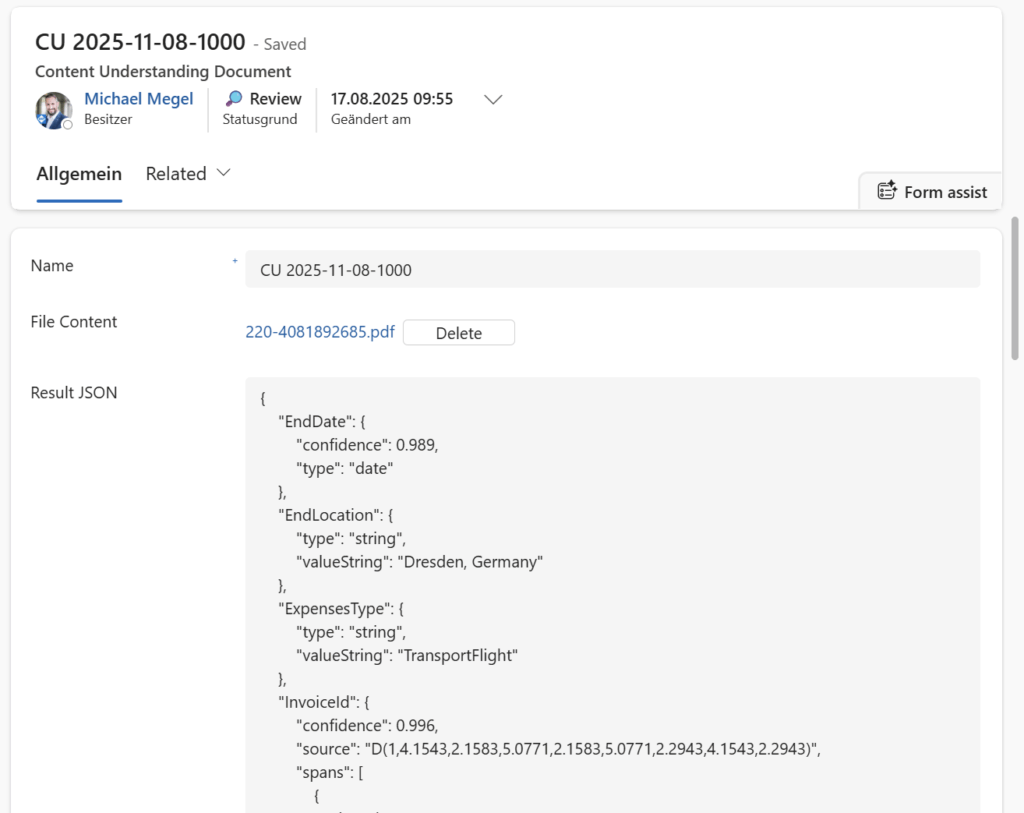

Meanwhile, my flow Process Document polls GetAnalyzerResult in its Do until loop. When GetAnalyzerResult returns the status Succeeded, my flow writes the extracted fields back into the column Result JSON.
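How the extracted fields end up in Result JSON depends on the analyzer schema. The following Python sketch assumes the result follows the shape result.contents[].fields, where each field stores its value in a property whose name starts with value (for example valueString or valueNumber), and valueArray or valueObject nest further fields. Treat this schema as an assumption and verify it against your own analyzer’s output; the function names and the sample field names in the test are hypothetical.

```python
import json
from typing import Any


def field_value(field: dict) -> Any:
    """Return the first value* property of a field (assumed schema)."""
    for key, value in field.items():
        if key.startswith("value"):
            if key == "valueArray":
                return [field_value(item) for item in value]
            if key == "valueObject":
                return {name: field_value(sub) for name, sub in value.items()}
            return value
    return None  # field was not found in the document


def extract_fields(analyzer_result: dict) -> dict:
    """Flatten the fields of all contents into {name: value}."""
    fields = {}
    for content in analyzer_result.get("result", {}).get("contents", []):
        for name, field in content.get("fields", {}).items():
            fields[name] = field_value(field)
    return fields


def to_result_json(analyzer_result: dict) -> str:
    """Serialize the flattened fields for a text column such as Result JSON."""
    return json.dumps(extract_fields(analyzer_result), ensure_ascii=False)
```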

A few seconds later, my document record in the Model-driven App contains the extracted information:

As you can see, I successfully used AI Foundry Content Understanding from Power Platform!

Summary

AI Foundry Content Understanding is a valuable service when used with the Power Platform. In this blog post, I demonstrated how Citizen Developers can leverage this service. I started with an overview of the necessary Content Understanding analyzer API calls and emphasized that the analyzer needs to download the file content itself.

With these requirements in mind, I first explained how I built a Power Automate flow that downloads the file content from Dataverse and exposes it as a public API endpoint. Based on this, I created a second flow that implements the document processing workflow. This flow first generates a URL to download the specific file content, then passes this URL to my analyzer to start the content extraction, and finally polls for the result in a loop.

Finally, I tested the whole process in a demo Model-driven App. There, I attached a file, for example an invoice, to a Dataverse table record, triggered my processing flow, and waited until the automation finished. Afterwards, the data extracted by my Content Understanding analyzer appeared in my Dataverse record. Now you can imagine the potential of combining Content Understanding and Power Platform.