Using Images in Prompt flow – Troubleshooting

Working with Azure OpenAI models that integrate text and images is cool. To be precise, I’m talking about GPT-4o and GPT-4o-mini. It is amazing to see the results of adding visual content to LLM prompts. Furthermore, Prompty makes it easy to define and review these prompts. But sometimes the devil is in the details when it comes to integration. Yes, I’m talking about using images in Prompt flow from generated Python code.

Today I will take you on a journey where I troubleshoot my AI solution. Furthermore, I will show you some techniques that I used as a professional developer to investigate the problem.

Getting started

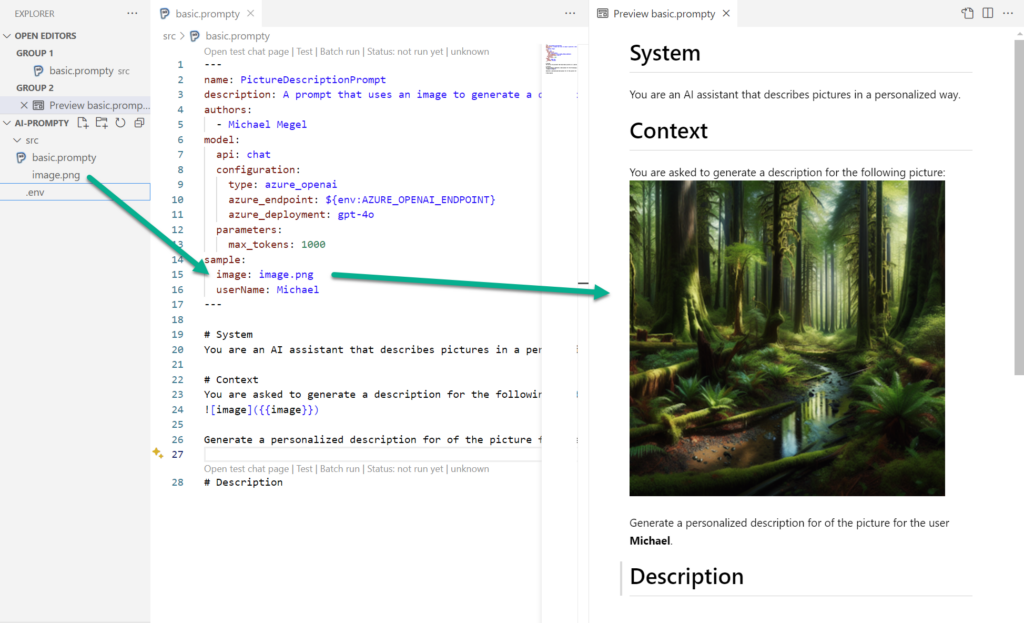

Do you remember my post about Prompty for VS Code? That is where I started to delve into including visual content in LLM requests. I created a simple prompt in Prompty format that should describe an image:

Everything worked fine as I ran this in VS Code with the Prompty extension.

Generated Prompt Flow

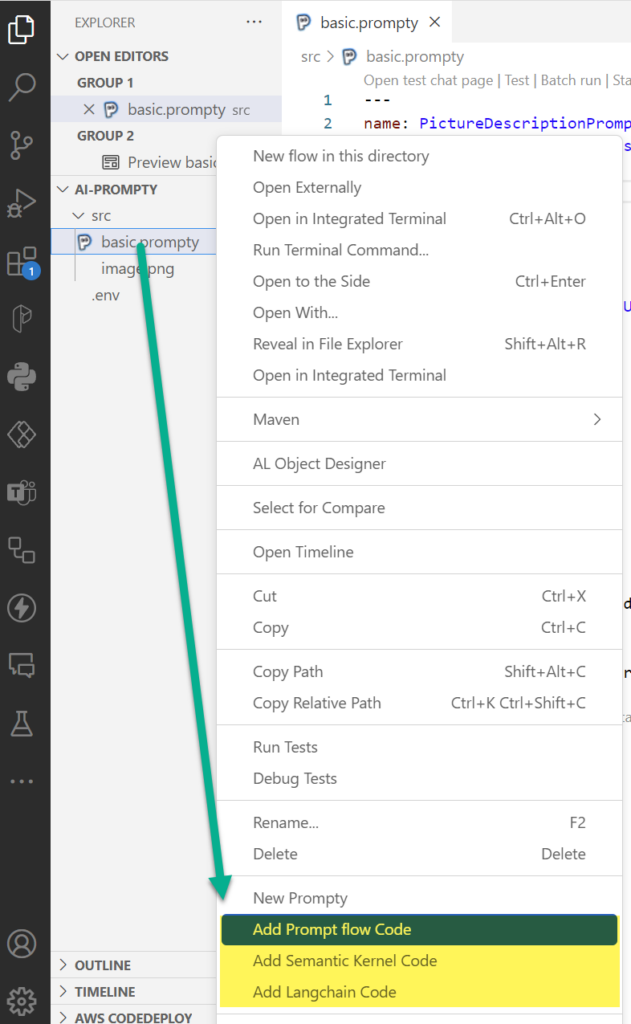

Then I tested Prompty’s integration options. For this, I selected Prompt flow:

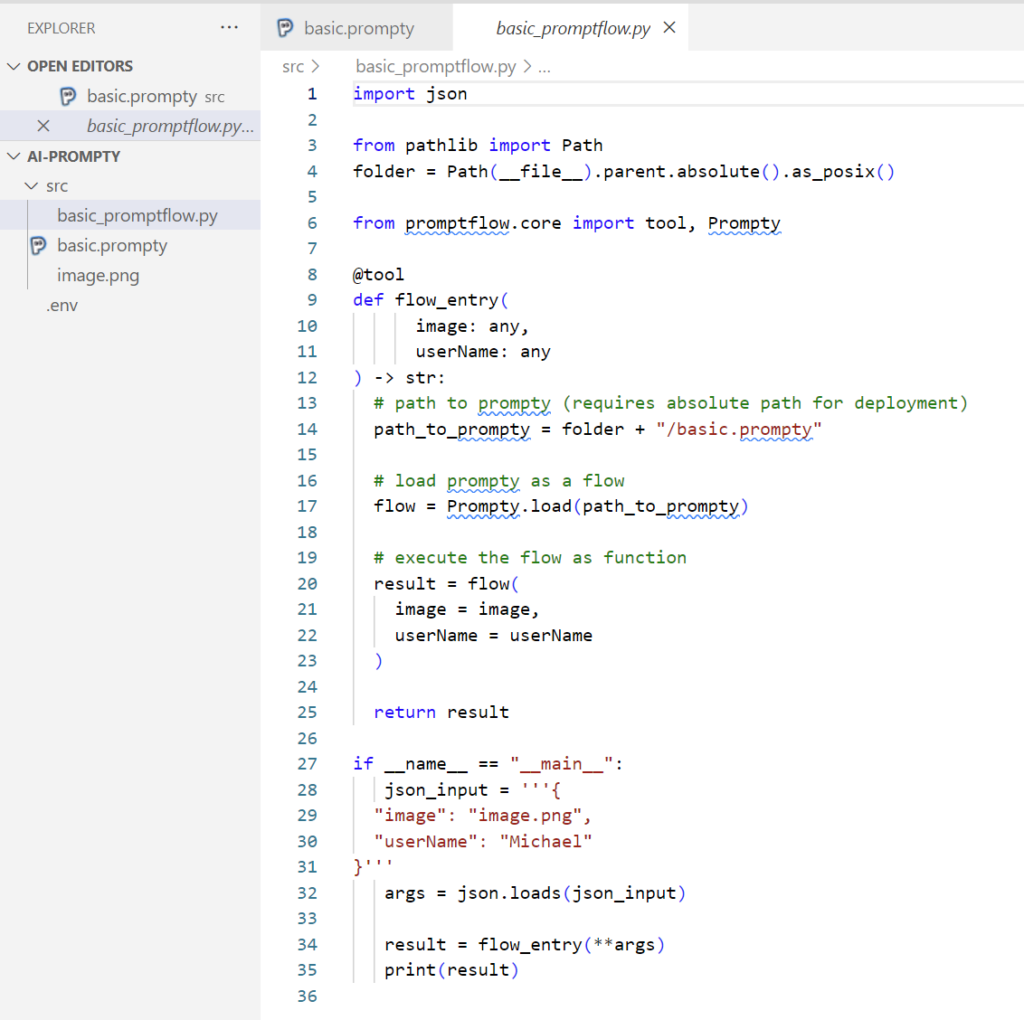

The generated code looked good to me:

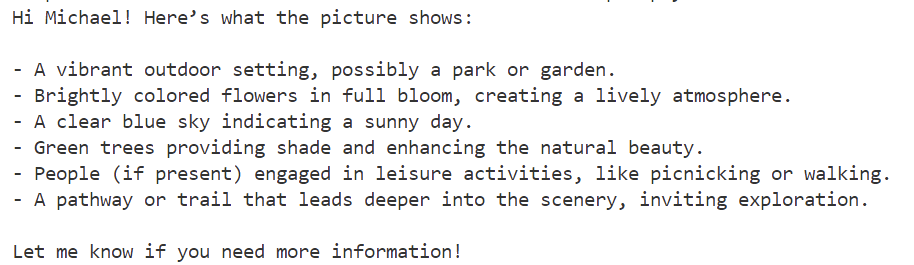

But the results did not match my expectations. Specifically, the description had nothing to do with the given picture:

Hey Michael,

Imagine stepping into a serene autumn afternoon – that’s exactly what your picture evokes! The frame is filled with a picturesque park, where a carpet of golden-orange leaves blankets the ground, suggesting that fall is in full swing. Majestic trees, with their branches dressed in a kaleidoscope of reds, oranges, and yellows, stretch towards the sky, creating a colorful canopy overhead.

In the midst of this natural wonderland, there’s a charming pathway winding through the park, inviting you for a leisurely stroll. The pathway is lined with rustic wooden benches, perfect spots to sit and take in the beauty around you. You might even spot a few people – bundled up in cozy sweaters and scarves – walking their dogs or riding bicycles, enjoying the crisp, cool air.

The sunlight filtering through the trees casts dappled shadows on the ground, adding layers of warmth and depth to the scene. It’s a perfect blend of tranquility and vibrant energy, like nature’s own masterpiece crafted just for a quiet afternoon escape.

It’s almost as if the scene is calling out to you, Michael, beckoning you to take a moment, breathe deeply, and lose yourself in the simple, breathtaking beauty of autumn.

Warmly, Your AI assistant

This LLM answer does not really describe my picture:

What happened? Did I misconfigure something? Did I use the wrong model, did I change my parameters, …?

Troubleshooting an AI solution

I know I have a problem. But how can I figure out what’s wrong? The task of my AI solution is to describe a given image and personalize the answer for the user in a well-written text.

First, I simplify this task in my Prompty. This helps me to verify quickly whether the LLM answer is correct. To do this, I change my instructions and request just bullet points from the AI model. I use this Prompty:

```
---
name: PictureDescriptionPrompt
description: A prompt that uses an image to generate a description.
authors:
  - Michael Megel
model:
  api: chat
  configuration:
    type: azure_openai
    azure_endpoint: ${env:AZURE_OPENAI_ENDPOINT}
    azure_deployment: gpt-4o-mini
    api_version: 2024-02-15-preview
  parameters:
    max_tokens: 1000
inputs:
  image:
    type: image
    description: The URL of the image to describe.
  userName:
    type: string
    description: The name of the user who requested the description.
sample:
  image: image-small.png
  userName: Michael
---

system:
## Rules
* You are an AI assistant that describes pictures.
* You provide details about the image in bullet points.

user:
## Question
{{userName}} ask you to describe the following picture:

Please answer **{{userName}}** in short bullet points and tell {{userName}} what the picture shows. Start the answer with a personal greeting.

# Description
```

In addition, I also change the picture. Now the answer must at least contain information about the bird shown in my picture:

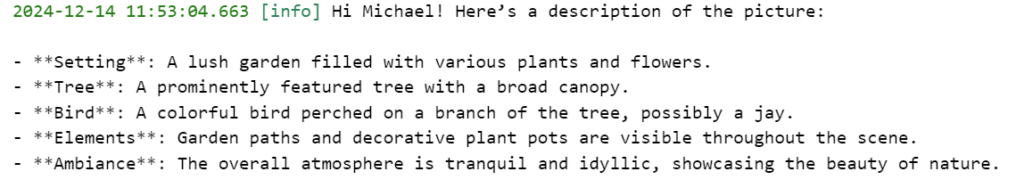

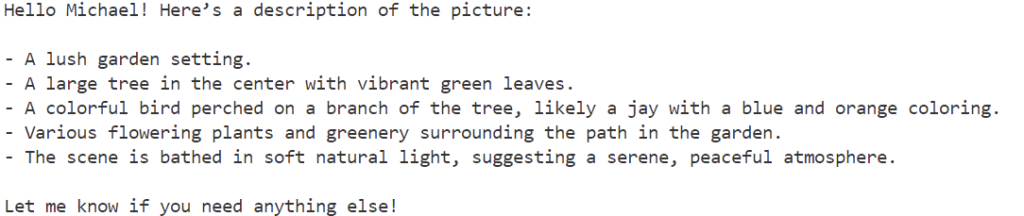

Next, I test every step of my solution. I’m starting with Prompty in VS Code and verify whether the answer is correct:

So far so good. My LLM answer describes the picture correctly. I can put a checkmark on this.
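Because the simplified prompt returns bullet points, this checkmark can also be scripted instead of checked by eye. The following is a minimal sketch, not part of Prompty or Prompt flow: the helper names `extract_bullets` and `mentions_keywords` are hypothetical, and it assumes the answer uses `-` or `*` bullets, as the answers to my simplified prompt do.

```python
import re


def extract_bullets(answer: str) -> list[str]:
    """Return the text of every line that starts with a '-' or '*' bullet."""
    bullets = []
    for line in answer.splitlines():
        match = re.match(r"^\s*[-*]\s+(.*)$", line)
        if match:
            bullets.append(match.group(1).strip())
    return bullets


def mentions_keywords(answer: str, keywords: list[str]) -> bool:
    """True if every keyword appears (case-insensitively) in at least one bullet."""
    bullets = [bullet.lower() for bullet in extract_bullets(answer)]
    return all(any(k.lower() in bullet for bullet in bullets) for k in keywords)


if __name__ == "__main__":
    # Hypothetical usage with an answer shaped like the one from my prompt
    answer = """Hello Michael! Here's a description of the picture:
- A lush garden scene with vibrant greenery.
- A colorful bird, likely a jay, perched on one of the tree branches.
Hope you find this helpful!"""
    print(mentions_keywords(answer, ["bird"]))  # True
```

A keyword check like this is deliberately crude; it only catches answers that miss the obvious subject, which is exactly the failure I am hunting here.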

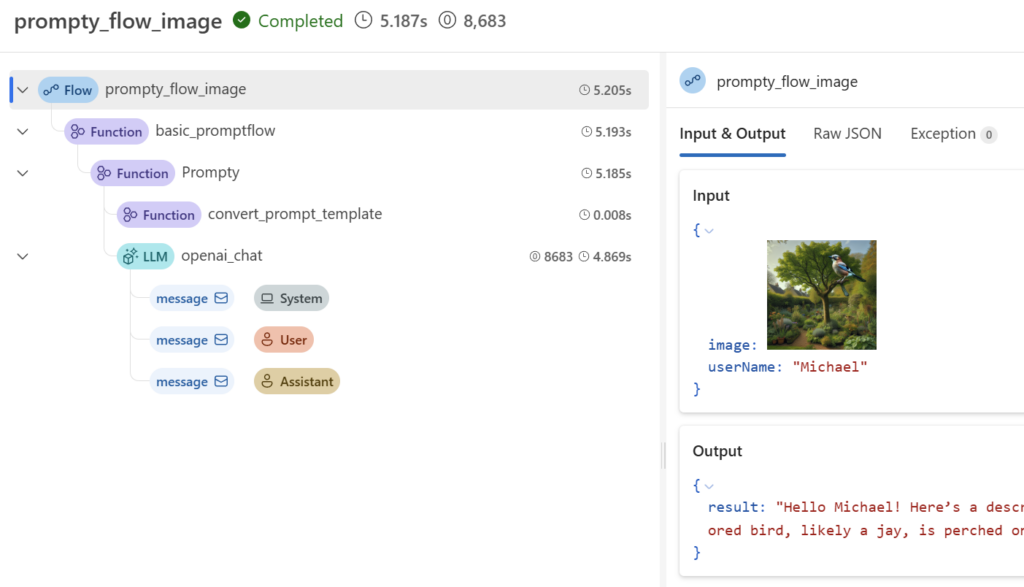

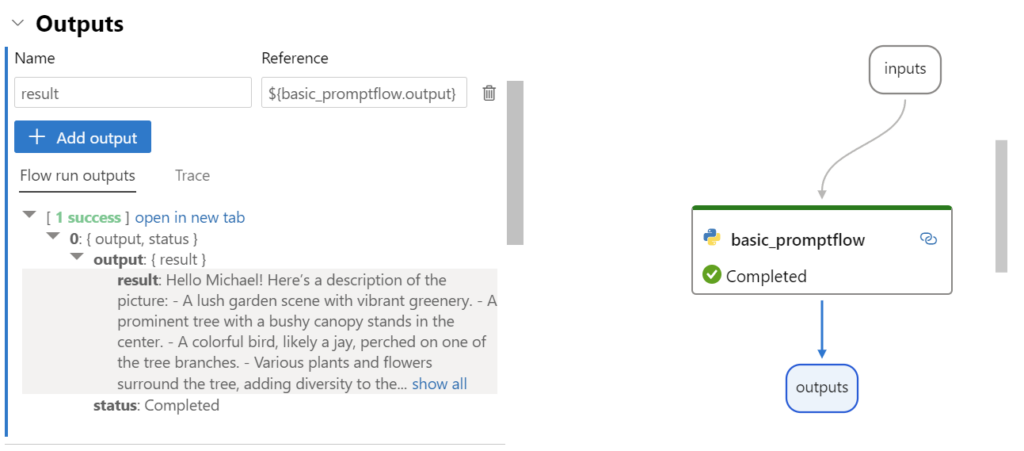

Afterwards, I repeat this and run my example in VS Code with the Prompt flow extension:

My model’s answer is also correct:

Hello Michael! Here’s a description of the picture:

- A lush garden scene with vibrant greenery.

- A prominent tree with a bushy canopy stands in the center.

- A colorful bird, likely a jay, perched on one of the tree branches.

- Various plants and flowers surround the tree, adding diversity to the garden.

- The setting appears tranquil and idyllic, possibly during early morning or late afternoon light.

Hope you find this helpful!

Finally, I execute the generated Python code for the Prompt flow:

And the result is:

This result does not match my given picture. Furthermore, re-running this code also gives incorrect answers. These answers are too random to blame on my LLM. In conclusion, something must be wrong with my code.

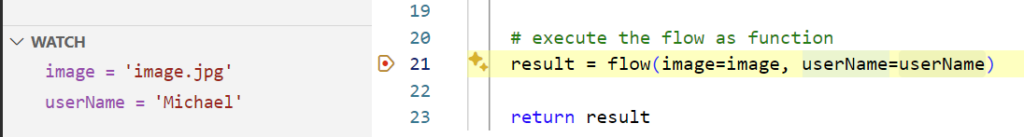

OK, I use my VS Code debugger and double-check the arguments:

The parameters image and userName are there. Weird: both parameters contain the same values that I used in Prompt flow and Prompty.

At first glance, everything seems correct. But I suspect this information is not passed to my model. This means I must review the HTTP requests.

Reviewing Trace Information

Do you remember? I already explained how to enable tracing for Prompt flow in one of my recent blog posts. Specifically, I use promptflow.tracing and add this code to my Python file:

# Import tracing library

from promptflow.tracing import start_trace

# Start tracing

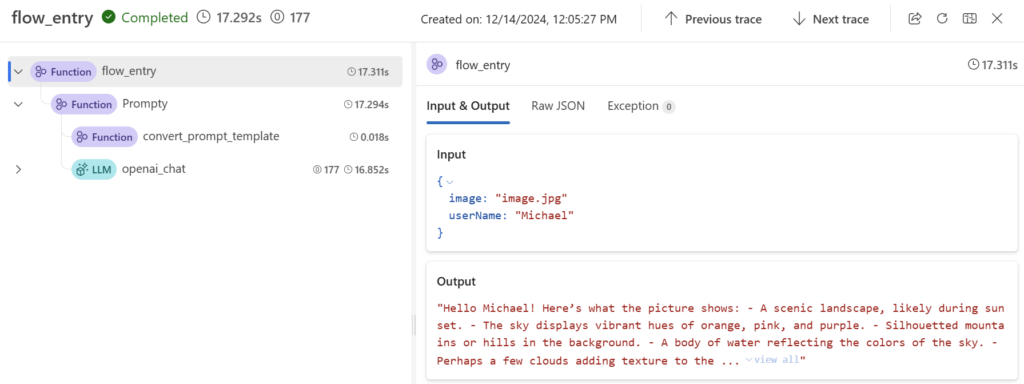

start_trace()As result I can use the Trace Viewer that shows me more details. In my flow_entry my parameters image and username are passed obviously correct into my flow:

Now I must compare this with my earlier request from the VS Code Prompt flow extension.

Here I immediately see a difference:

Indeed, in Prompt flow the parameter image is provided as an image and not as text. That means I’m on the right track: my generated Python code does not include my local image in the API request to my LLM.
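Instead of comparing the two requests only visually, I can also check a request payload programmatically. This is a minimal sketch under an assumption: the Raw JSON of the LLM call follows the Azure OpenAI chat completions format, where a message’s `content` is either a plain string or a list of parts, and image parts carry `"type": "image_url"`. The function name `request_has_image` is hypothetical, and the exact shape of the trace JSON may differ.

```python
import json
from typing import Any


def request_has_image(request: dict[str, Any]) -> bool:
    """Return True if any chat message contains an image_url content part."""
    for message in request.get("messages", []):
        content = message.get("content")
        # Text-only messages carry a string; multimodal ones carry a list of parts.
        if isinstance(content, list):
            for part in content:
                if isinstance(part, dict) and part.get("type") == "image_url":
                    return True
    return False


if __name__ == "__main__":
    # The file name ends up as plain text: no image reaches the model
    text_only = json.loads('{"messages": [{"role": "user", "content": "describe image.jpg"}]}')
    with_image = {
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "Describe the following picture:"},
                    {"type": "image_url", "image_url": {"url": "data:image/png;base64,iVBORw0KGgo="}},
                ],
            }
        ]
    }
    print(request_has_image(text_only))   # False
    print(request_has_image(with_image))  # True
```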

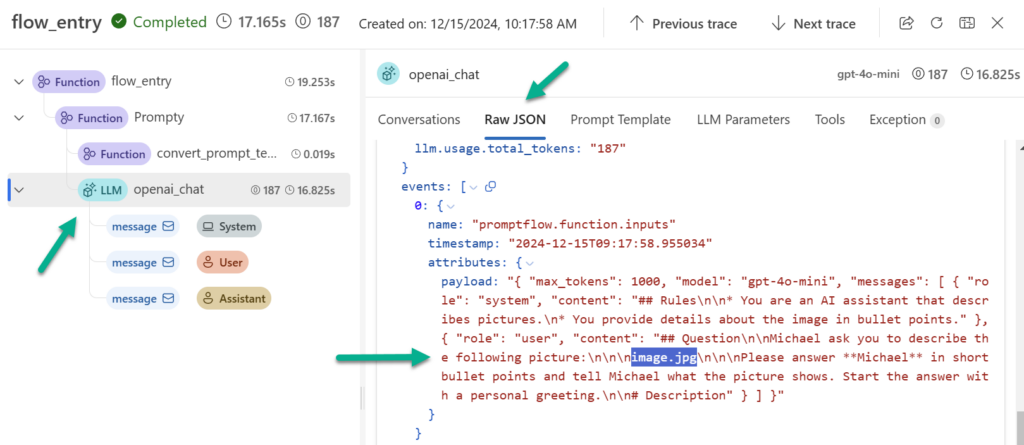

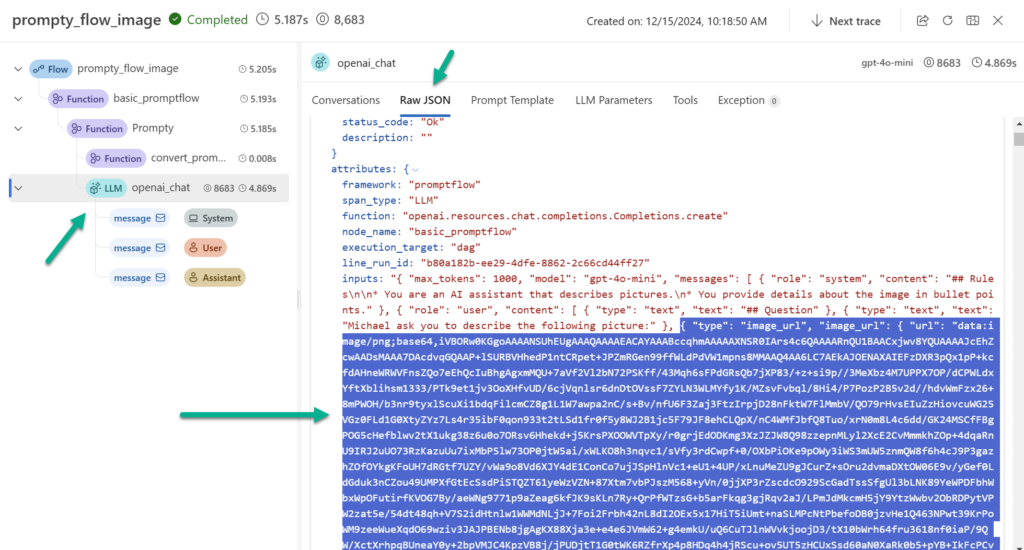

Drilling down into the Raw JSON view of the LLM information in the Trace Viewer shows me exactly what I suspected:

My assumption is correct. The upper screenshot differs from the next one: the request from VS Code Prompt flow contains my image data encoded as Base64:

Now I know what my problem is. I must ensure that I include my local images correctly as Base64 data in my Prompt flow calls…
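To illustrate what “Base64 image data” means in a chat request, here is a minimal sketch using only the Python standard library. It assumes the Azure OpenAI chat completions format for image parts (a `data:` URL inside an `image_url` part); the helper name `image_part_from_file` is hypothetical, and this is not how Prompt flow builds its requests internally.

```python
import base64
import mimetypes
from pathlib import Path


def image_part_from_file(path: str) -> dict:
    """Read a local image and wrap it as a Base64 data-URL content part."""
    # Derive the MIME type from the file extension, e.g. .jpg -> image/jpeg
    mime_type, _ = mimetypes.guess_type(path)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"Not a recognized image file: {path}")
    # Encode the raw bytes as Base64 text so they fit into a JSON request
    encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return {
        "type": "image_url",
        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
    }
```

A user message would then carry a list such as `[{"type": "text", "text": "..."}, image_part_from_file("image.jpg")]` as its `content`, instead of the bare file name.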

Improving the generated Prompt flow code

Let me change my code and add the local image file as image data to the request.

First, I import the Image class from Prompt flow. Afterwards, I load the image from my local folder, convert it into an Image object, and override the image argument:

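```python
if __name__ == "__main__":
    json_input = """{
        "image": "image.jpg",
        "userName": "Michael"
    }"""
    args = json.loads(json_input)

    # import the Image class from Prompt flow
    from promptflow.contracts.multimedia import Image

    # load the local image as bytes and wrap it in an Image object
    # (`folder` is the script directory defined earlier in the generated code)
    with open(folder + "/" + args["image"], "rb") as image_file:
        image = Image(value=image_file.read())

    # override the file name with the Image object
    args["image"] = image
```

Now I run my improved code, and I see this result: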

Well done, this answer now contains details about my given picture.
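The fix above handles exactly one input. If a Prompty declares several inputs with `type: image`, the same idea can be generalized. This is a minimal sketch, not generated code: the helper name `load_image_inputs` is hypothetical, and the wrapper is passed in as a parameter so that, in the real flow, it can be `lambda data: Image(value=data)` with the Image class imported above, while the sketch itself needs only the standard library.

```python
from pathlib import Path
from typing import Any, Callable, Iterable


def load_image_inputs(
    args: dict[str, Any],
    folder: str,
    image_keys: Iterable[str],
    wrap: Callable[[bytes], Any] = lambda data: data,
) -> dict[str, Any]:
    """Replace file-name strings of image inputs with (wrapped) file bytes."""
    resolved = dict(args)  # leave the caller's dict untouched
    for key in image_keys:
        value = resolved.get(key)
        # Only plain strings are treated as file names relative to `folder`;
        # anything else (e.g. an already-loaded Image) is passed through as-is.
        if isinstance(value, str):
            resolved[key] = wrap((Path(folder) / value).read_bytes())
    return resolved
```

In the generated code, the call would look like `args = load_image_inputs(args, folder, ["image"], wrap=lambda data: Image(value=data))`. Keeping the file reading separate from the Image wrapping keeps the helper testable without a Prompt flow installation.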

Summary

Troubleshooting can be a long journey. You have seen this in my example, where automatically generated code caused a problem. Moreover, this issue could easily be overlooked, because my AI solution used an LLM to turn the provided image into a long, personalized description. So, do not trust LLM answers blindly!

Today, I also showed you how I started analyzing this problem. These steps can help you detect similar issues. First, I simplified my AI solution scenario to get a clear test scenario. Second, I checked every single step of my solution: I started with Prompty in VS Code, continued with Prompt flow in VS Code, and finally ran the generated Python code. Furthermore, I used the debugger and reviewed the trace information of my solution. This helped me locate the root cause of my problem.

With this information, I was able to fix my broken Python code. Now I provide the given image correctly as Base64 image data to my LLM. Bottom line: a small issue caused a huge problem, which I detected by using my pro developer tools. Finally, I have learned how to integrate my images correctly into the automatically generated Python code for Prompt flow.